# index.html.md

# NVIDIA NIM Microservices

## Natural Language Processing

NeMo Retriever

Get access to state-of-the-art models for building text Q&A retrieval pipelines with high accuracy.

Text Embedding

Text Reranking

# index.html.md

# Local

Choose your preferred installation method for running RAPIDS

Conda

Install RAPIDS using conda

Docker

Install RAPIDS using Docker

pip

Install RAPIDS using pip

WSL2

Install RAPIDS on Windows using Windows Subsystem for Linux version 2 (WSL2)

# index.html.md

# HPC

RAPIDS works extremely well in traditional HPC (High Performance Computing) environments where GPUs are often co-located with accelerated networking hardware such as InfiniBand. Deploying on HPC often means using queue management systems such as SLURM, LSF, PBS, etc.

## SLURM

#### WARNING

This is a legacy page and may contain outdated information. We are working hard to update our documentation with the latest and greatest information, thank you for bearing with us.

If you are unfamiliar with SLURM or need a refresher, we recommend the [quickstart guide](https://slurm.schedmd.com/quickstart.html).

Depending on how your nodes are configured, additional settings may be required such as defining the number of GPUs `(--gpus)` desired or the number of gpus per node `(--gpus-per-node)`.

In the following example, we assume each allocation runs on a DGX1 with access to all eight GPUs.

### Start Scheduler

First, start the scheduler with the following SLURM script. This and the following scripts can deployed with `salloc` for interactive usage or `sbatch` for batched run.

```bash

#!/usr/bin/env bash

#SBATCH -J dask-scheduler

#SBATCH -n 1

#SBATCH -t 00:10:00

module load cuda/11.0.3

CONDA_ROOT=/nfs-mount/user/miniconda3

source $CONDA_ROOT/etc/profile.d/conda.sh

conda activate rapids

LOCAL_DIRECTORY=/nfs-mount/dask-local-directory

mkdir $LOCAL_DIRECTORY

CUDA_VISIBLE_DEVICES=0 dask-scheduler \

--protocol tcp \

--scheduler-file "$LOCAL_DIRECTORY/dask-scheduler.json" &

dask-cuda-worker \

--rmm-pool-size 14GB \

--scheduler-file "$LOCAL_DIRECTORY/dask-scheduler.json"

```

Notice that we configure the scheduler to write a `scheduler-file` to a NFS accessible location. This file contains metadata about the scheduler and will

include the IP address and port for the scheduler. The file will serve as input to the workers informing them what address and port to connect.

The scheduler doesn’t need the whole node to itself so we can also start a worker on this node to fill out the unused resources.

### Start Dask CUDA Workers

Next start the other [dask-cuda workers](https://docs.rapids.ai/api/dask-cuda/nightly/). Dask-CUDA extends the traditional Dask `Worker` class with specific options and enhancements for GPU environments. Unlike the scheduler and client, the workers script should be scalable and allow the users to tune how many workers are created.

For example, we can scale the number of nodes to 3: `sbatch/salloc -N3 dask-cuda-worker.script` . In this case, because we have 8 GPUs per node and we have 3 nodes,

our job will have 24 workers.

```bash

#!/usr/bin/env bash

#SBATCH -J dask-cuda-workers

#SBATCH -t 00:10:00

module load cuda/11.0.3

CONDA_ROOT=/nfs-mount/miniconda3

source $CONDA_ROOT/etc/profile.d/conda.sh

conda activate rapids

LOCAL_DIRECTORY=/nfs-mount/dask-local-directory

mkdir $LOCAL_DIRECTORY

dask-cuda-worker \

--rmm-pool-size 14GB \

--scheduler-file "$LOCAL_DIRECTORY/dask-scheduler.json"

```

### cuDF Example Workflow

Lastly, we can now run a job on the established Dask Cluster.

```bash

#!/usr/bin/env bash

#SBATCH -J dask-client

#SBATCH -n 1

#SBATCH -t 00:10:00

module load cuda/11.0.3

CONDA_ROOT=/nfs-mount/miniconda3

source $CONDA_ROOT/etc/profile.d/conda.sh

conda activate rapids

LOCAL_DIRECTORY=/nfs-mount/dask-local-directory

cat <>/tmp/dask-cudf-example.py

import cudf

import dask.dataframe as dd

from dask.distributed import Client

client = Client(scheduler_file="$LOCAL_DIRECTORY/dask-scheduler.json")

cdf = cudf.datasets.timeseries()

ddf = dd.from_pandas(cdf, npartitions=10)

res = ddf.groupby(['id', 'name']).agg(['mean', 'sum', 'count']).compute()

print(res)

EOF

python /tmp/dask-cudf-example.py

```

### Confirm Output

Putting the above together will result in the following output:

```bash

x y

mean sum count mean sum count

id name

1077 Laura 0.028305 1.868120 66 -0.098905 -6.527731 66

1026 Frank 0.001536 1.414839 921 -0.017223 -15.862306 921

1082 Patricia 0.072045 3.602228 50 0.081853 4.092667 50

1007 Wendy 0.009837 11.676199 1187 0.022978 27.275216 1187

976 Wendy -0.003663 -3.267674 892 0.008262 7.369577 892

... ... ... ... ... ... ...

912 Michael 0.012409 0.459119 37 0.002528 0.093520 37

1103 Ingrid -0.132714 -1.327142 10 0.108364 1.083638 10

998 Tim 0.000587 0.747745 1273 0.001777 2.262094 1273

941 Yvonne 0.050258 11.358393 226 0.080584 18.212019 226

900 Michael -0.134216 -1.073729 8 0.008701 0.069610 8

[6449 rows x 6 columns]

```

# index.html.md

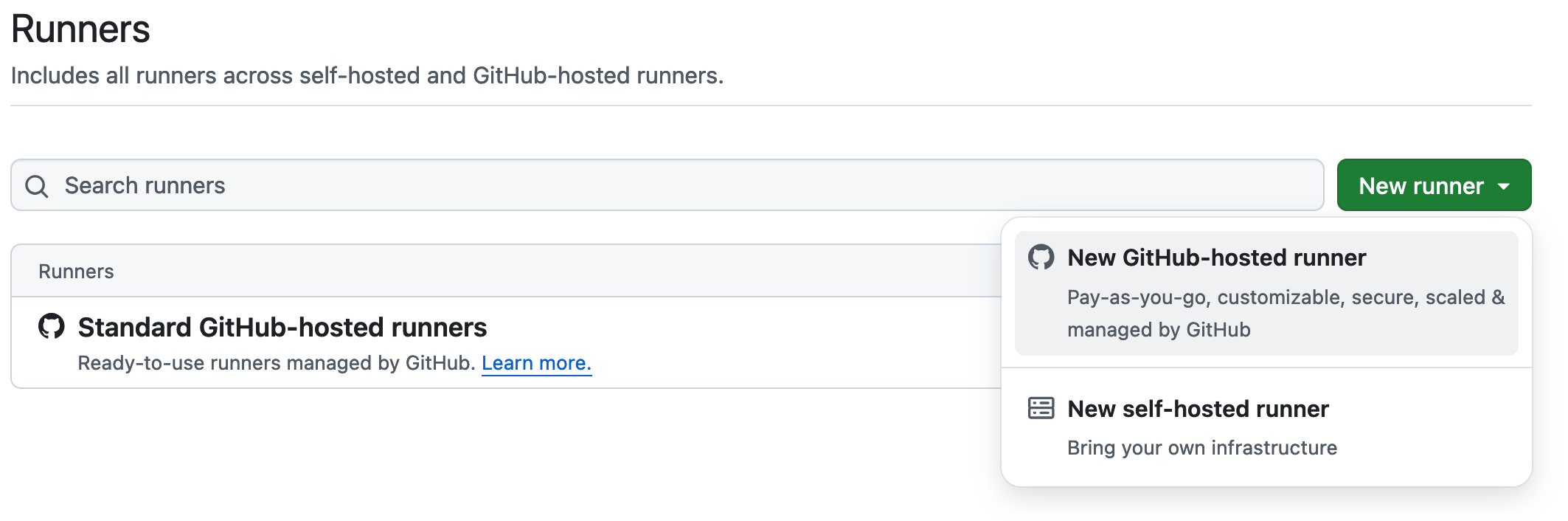

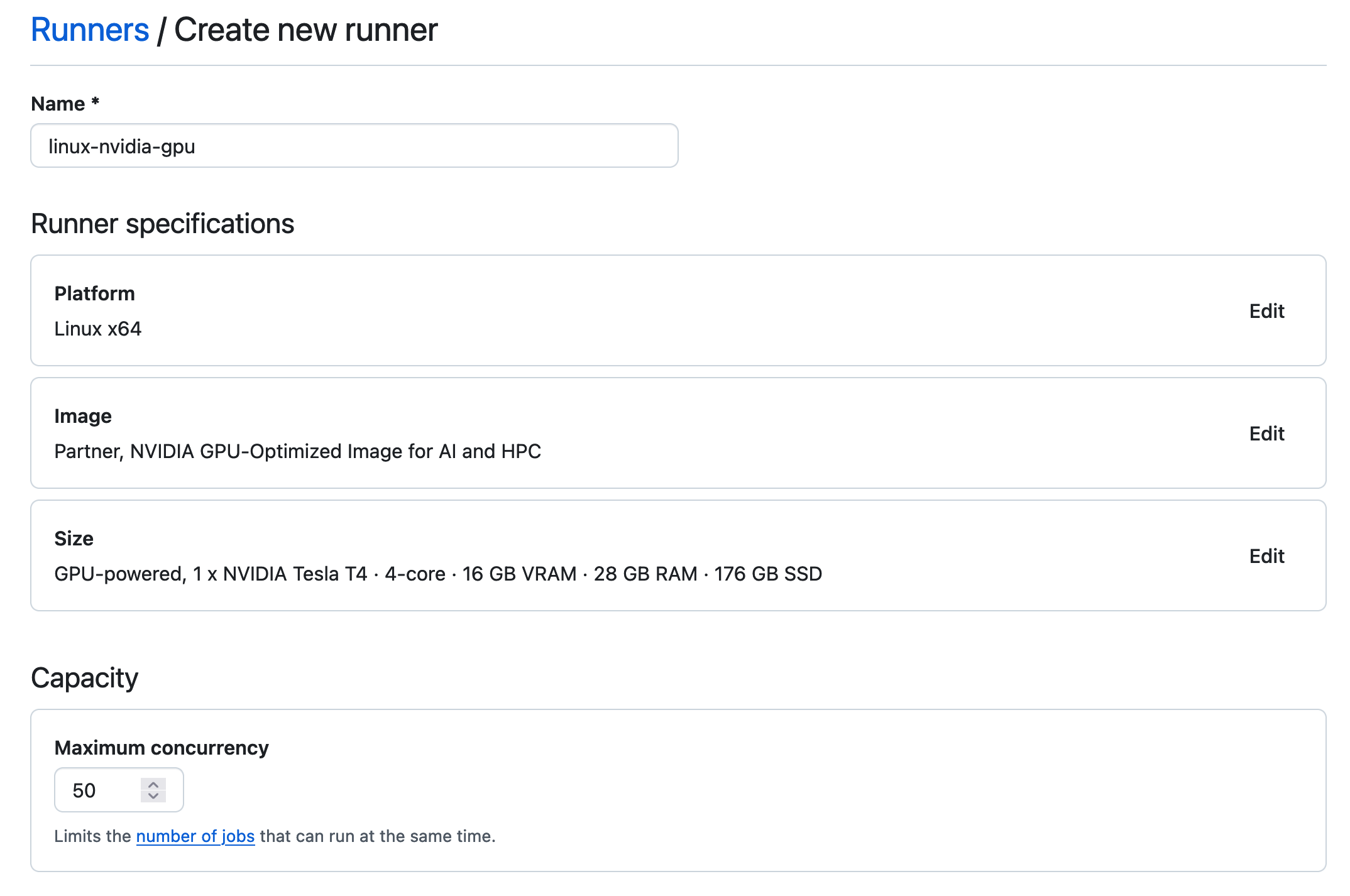

# Continuous Integration

GitHub Actions

Run tests in GitHub Actions that depend on RAPIDS and NVIDIA GPUs.

single-node

# index.html.md

# Custom RAPIDS Docker Guide

This guide provides instructions for building custom RAPIDS Docker containers. This approach allows you to select only the RAPIDS libraries you need, which is ideal for creating minimal, customizable images that can be tuned to your requirements.

#### NOTE

For quick setup with pre-built containers that include the full RAPIDS suite, please see the [Official RAPIDS Docker Installation Guide](https://docs.rapids.ai/install#docker).

## Overview

Building a custom RAPIDS container offers several advantages:

- **Minimal Image Sizes**: By including only the libraries you need, you can reduce the final image size.

- **Flexible Configuration**: You have full control over library versions and dependencies.

## Getting Started

To begin, you will need to create a few local files for your custom build: a `Dockerfile` and a configuration file (`env.yaml` for conda or `requirements.txt` for pip). The templates for these files is provided in the Docker Templates section below for you to copy.

1. **Create a Project Directory**: It’s best practice to create a dedicated folder for your custom build.

```bash

mkdir rapids-custom-build && cd rapids-custom-build

```

2. **Prepare Your Project Files**: Based on your chosen approach (conda or pip), create the necessary files in your project directory from the corresponding tab in the Docker Templates section below.

3. **Customize Your Build**:

- When using **conda**, edit your local `env.yaml` file to add the desired RAPIDS libraries.

- When using **pip**, edit your local `requirements.txt` file with your desired RAPIDS libraries.

4. **Build the Image**: Use the commands provided in the Build and Run section to create and start your custom container.

---

## Package Manager Differences

The choice of base image depends on how your package manager handles CuPy (a dependency for most RAPIDS libraries) and CUDA library dependencies:

### Conda → Uses `cuda-base`

```dockerfile

FROM nvidia/cuda:12.9.1-base-ubuntu24.04

```

This approach works because conda can install both Python and non-Python dependencies, including system-level CUDA libraries like `libcudart` and `libnvrtc`. When installing RAPIDS libraries via conda, the package manager automatically pulls the required CUDA runtime libraries alongside CuPy and other dependencies, providing complete dependency management in a single installation step.

### Pip → Uses `cuda-runtime`

```dockerfile

FROM nvidia/cuda:12.9.1-runtime-ubuntu24.04

```

This approach is necessary because CuPy wheels distributed via PyPI do not currently bundle CUDA runtime libraries (`libcudart`, `libnvrtc`) within the wheel packages themselves. Since pip cannot install system-level CUDA libraries, CuPy expects these libraries to already be present in the system environment. The `cuda-runtime` image provides the necessary CUDA runtime libraries that CuPy requires, eliminating the need for manual library installation.

## Docker Templates

The complete source code for the Dockerfiles and their configurations are included here. Choose your preferred package manager.

### conda

This method uses conda and is ideal for workflows that are based on `conda`.

**`rapids-conda.Dockerfile`**

```dockerfile

# syntax=docker/dockerfile:1

# Copyright (c) 2024-2025, NVIDIA CORPORATION.

ARG CUDA_VER=12.9.1

ARG LINUX_DISTRO=ubuntu

ARG LINUX_DISTRO_VER=24.04

FROM nvidia/cuda:${CUDA_VER}-base-${LINUX_DISTRO}${LINUX_DISTRO_VER}

SHELL ["/bin/bash", "-euo", "pipefail", "-c"]

# Install system dependencies

RUN apt-get update && \

apt-get install -y --no-install-recommends \

wget \

curl \

git \

ca-certificates \

&& rm -rf /var/lib/apt/lists/*

# Install Miniforge

RUN wget -qO /tmp/miniforge.sh "https://github.com/conda-forge/miniforge/releases/latest/download/Miniforge3-Linux-x86_64.sh" && \

bash /tmp/miniforge.sh -b -p /opt/conda && \

rm /tmp/miniforge.sh && \

/opt/conda/bin/conda clean --all --yes

# Add conda to PATH and activate base environment

ENV PATH="/opt/conda/bin:${PATH}"

ENV CONDA_DEFAULT_ENV=base

ENV CONDA_PREFIX=/opt/conda

# Create conda group and rapids user

RUN groupadd -g 1001 conda && \

useradd -rm -d /home/rapids -s /bin/bash -g conda -u 1001 rapids && \

chown -R rapids:conda /opt/conda

USER rapids

WORKDIR /home/rapids

# Copy the environment file template

COPY --chmod=644 env.yaml /home/rapids/env.yaml

# Update the base environment with user's packages from env.yaml

# Note: The -n base flag ensures packages are installed to the base environment

# overriding any 'name:' specified in the env.yaml file

RUN /opt/conda/bin/conda env update -n base -f env.yaml && \

/opt/conda/bin/conda clean --all --yes

CMD ["bash"]

```

**`env.yaml`** (Conda environment configuration)

```yaml

name: base

channels:

- "rapidsai-nightly"

- conda-forge

- nvidia

dependencies:

- python=3.12

- cudf=25.12

```

### pip

This approach uses Python virtual environments and is ideal for workflows that are already based on `pip`.

**`rapids-pip.Dockerfile`**

```dockerfile

# syntax=docker/dockerfile:1

# Copyright (c) 2024-2025, NVIDIA CORPORATION.

ARG CUDA_VER=12.9.1

ARG PYTHON_VER=3.12

ARG LINUX_DISTRO=ubuntu

ARG LINUX_DISTRO_VER=24.04

# Use CUDA runtime image for pip

FROM nvidia/cuda:${CUDA_VER}-runtime-${LINUX_DISTRO}${LINUX_DISTRO_VER}

ARG PYTHON_VER

SHELL ["/bin/bash", "-euo", "pipefail", "-c"]

# Install system dependencies

RUN apt-get update && \

apt-get install -y --no-install-recommends \

python${PYTHON_VER} \

python${PYTHON_VER}-venv \

python3-pip \

wget \

curl \

git \

ca-certificates \

&& rm -rf /var/lib/apt/lists/*

# Create symbolic links for python and pip

RUN ln -sf /usr/bin/python${PYTHON_VER} /usr/bin/python && \

ln -sf /usr/bin/python${PYTHON_VER} /usr/bin/python3

# Create rapids user

RUN groupadd -g 1001 rapids && \

useradd -rm -d /home/rapids -s /bin/bash -g rapids -u 1001 rapids

USER rapids

WORKDIR /home/rapids

# Create and activate virtual environment

RUN python -m venv /home/rapids/venv

ENV PATH="/home/rapids/venv/bin:$PATH"

ENV VIRTUAL_ENV="/home/rapids/venv"

# Upgrade pip

RUN pip install --no-cache-dir --upgrade pip setuptools wheel

# Copy the requirements file

COPY --chmod=644 requirements.txt /home/rapids/requirements.txt

# Install all packages

RUN pip install --no-cache-dir -r requirements.txt

CMD ["bash"]

```

**`requirements.txt`** (Pip package requirements)

```text

# RAPIDS libraries (pip versions)

cudf-cu12==25.12.*,>=0.0.0a0

```

---

## Build and Run

### Conda

After copying the source files into your local directory:

```bash

# Build the image

docker build -f rapids-conda.Dockerfile -t rapids-conda-base .

# Start a container with an interactive shell

docker run --gpus all -it rapids-conda-base

```

### Pip

After copying the source files into your local directory:

```bash

# Build the image

docker build -f rapids-pip.Dockerfile -t rapids-pip-base .

# Start a container with an interactive shell

docker run --gpus all -it rapids-pip-base

```

#### IMPORTANT

When using `pip`, you must specify the CUDA version in the package name (e.g., `cudf-cu12`, `cuml-cu12`). This ensures you install the version of the library that is compatible with the CUDA toolkit.

#### NOTE

**GPU Access with `--gpus all`**: The `--gpus` flag uses the NVIDIA Container Toolkit to dynamically mount GPU device files (`/dev/nvidia*`), NVIDIA driver libraries (`libcuda.so`, `libnvidia-ml.so`), and utilities like `nvidia-smi` from the host system into your container at runtime. This is why `nvidia-smi` becomes available even though it’s not installed in your Docker image. Your container only needs to provide the CUDA runtime libraries (like `libcudart`) that RAPIDS requires—the host system’s NVIDIA driver handles the rest.

### Image Size Comparison

One of the key benefits of building custom RAPIDS containers is the significant reduction in image size compared to the pre-built RAPIDS images. Here are actual measurements from containers containing only cuDF:

| Image Type | Contents | Size |

|----------------------|-------------------|-------------|

| **Custom conda** | cuDF only | **6.83 GB** |

| **Custom pip** | cuDF only | **6.53 GB** |

| **Pre-built RAPIDS** | Full RAPIDS suite | **12.9 GB** |

Custom builds are smaller in size when you only need specific RAPIDS libraries like cuDF. These size reductions result in faster container pulls and deployments, reduced storage costs in container registries, lower bandwidth usage in distributed environments, and quicker startup times for containerized applications.

## Extending the Container

One of the benefits of building custom RAPIDS containers is the ability to easily add your own packages to the environment. You can add any combination of RAPIDS and non-RAPIDS libraries to create a fully featured container for your workloads.

### Using conda

To add packages to the Conda environment, add them to the `dependencies` list in your `env.yaml` file.

**Example: Adding `scikit-learn` and `xgboost` to a conda image containing `cudf`**

```yaml

name: base

channels:

- rapidsai-nightly

- conda-forge

- nvidia

dependencies:

- cudf=25.12

- scikit-learn

- xgboost

```

### Using pip

To add packages to the Pip environment, add them to your `requirements.txt` file.

**Example: Adding `scikit-learn` and `lightgbm` to a pip image containing `cudf`**

```text

cudf-cu12==25.12.*,>=0.0.0a0

scikit-learn

lightgbm

```

After modifying your configuration file, rebuild the Docker image. The new packages will be automatically included in your custom RAPIDS environment.

## Build Configuration

You can customize the build by modifying the version variables at the top of each Dockerfile. These variables control the CUDA version, Python version, and Linux distribution used in your container.

### Available Configuration Variables

The following variables can be modified at the top of each Dockerfile to customize your build:

| Variable | Default Value | Description | Example Values |

|-------------------------|-----------------|--------------------------------------------------------|----------------------|

| `CUDA_VER` | `12.9.1` | Sets the CUDA version for the base image and packages. | `12.0` |

| `PYTHON_VER` (pip only) | `3.12` | Defines the Python version to install and use. | `3.11`, `3.10` |

| `LINUX_DISTRO` | `ubuntu` | The Linux distribution being used | `rockylinux9`, `cm2` |

| `LINUX_DISTRO_VER` | `24.04` | The version of the Linux distribution. | `20.04` |

#### NOTE

For conda installations, you can choose the required python version in the `env.yaml` file

## Verifying Your Installation

After starting your container, you can quickly test that RAPIDS is installed and running correctly. The container launches directly into a `bash` shell where you can install the [RAPIDS CLI](https://github.com/rapidsai/rapids-cli) command line utility to verify your installation.

1. **Run the Container Interactively**

This command starts your container and drops you directly into a bash shell.

```bash

# For Conda builds

docker run --gpus all -it rapids-conda-base

# For Pip builds

docker run --gpus all -it rapids-pip-base

```

2. **Install RAPIDS CLI**

Inside the containers, install the RAPIDS CLI:

```bash

pip install rapids-cli

```

3. **Test the installation using the Doctor subcommand**

Once RAPIDS CLI is installed, you can use the `rapids doctor` subcommand to perform health checks.

```bash

rapids doctor

```

4. **Expected Output**

If your installation is successful, you will see output similar to this:

```bash

🧑⚕️ Performing REQUIRED health check for RAPIDS

Running checks

All checks passed!

```

# index.html.md

# Continuous Integration

GitHub Actions

Run tests in GitHub Actions that depend on RAPIDS and NVIDIA GPUs.

single-node

# index.html.md

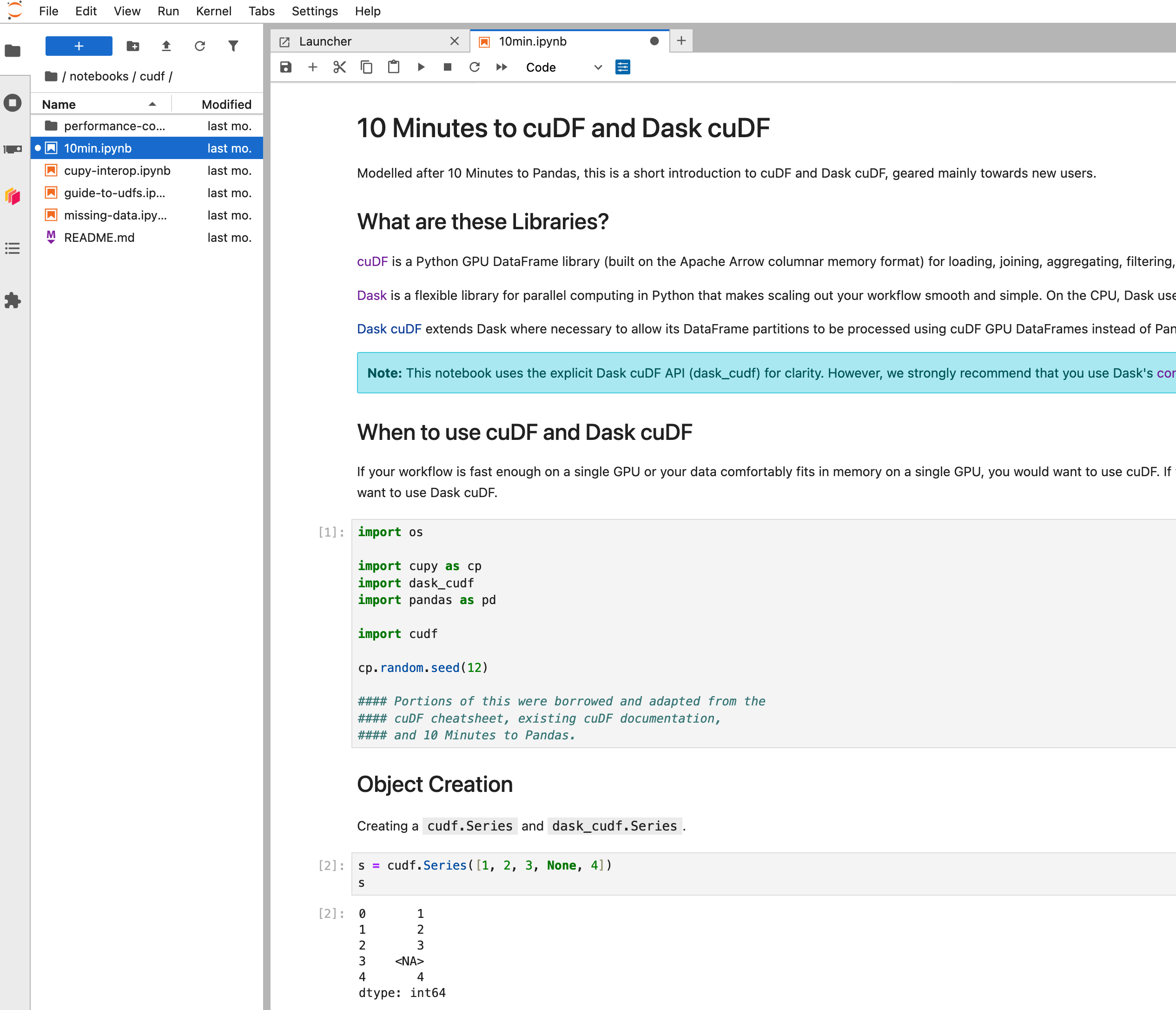

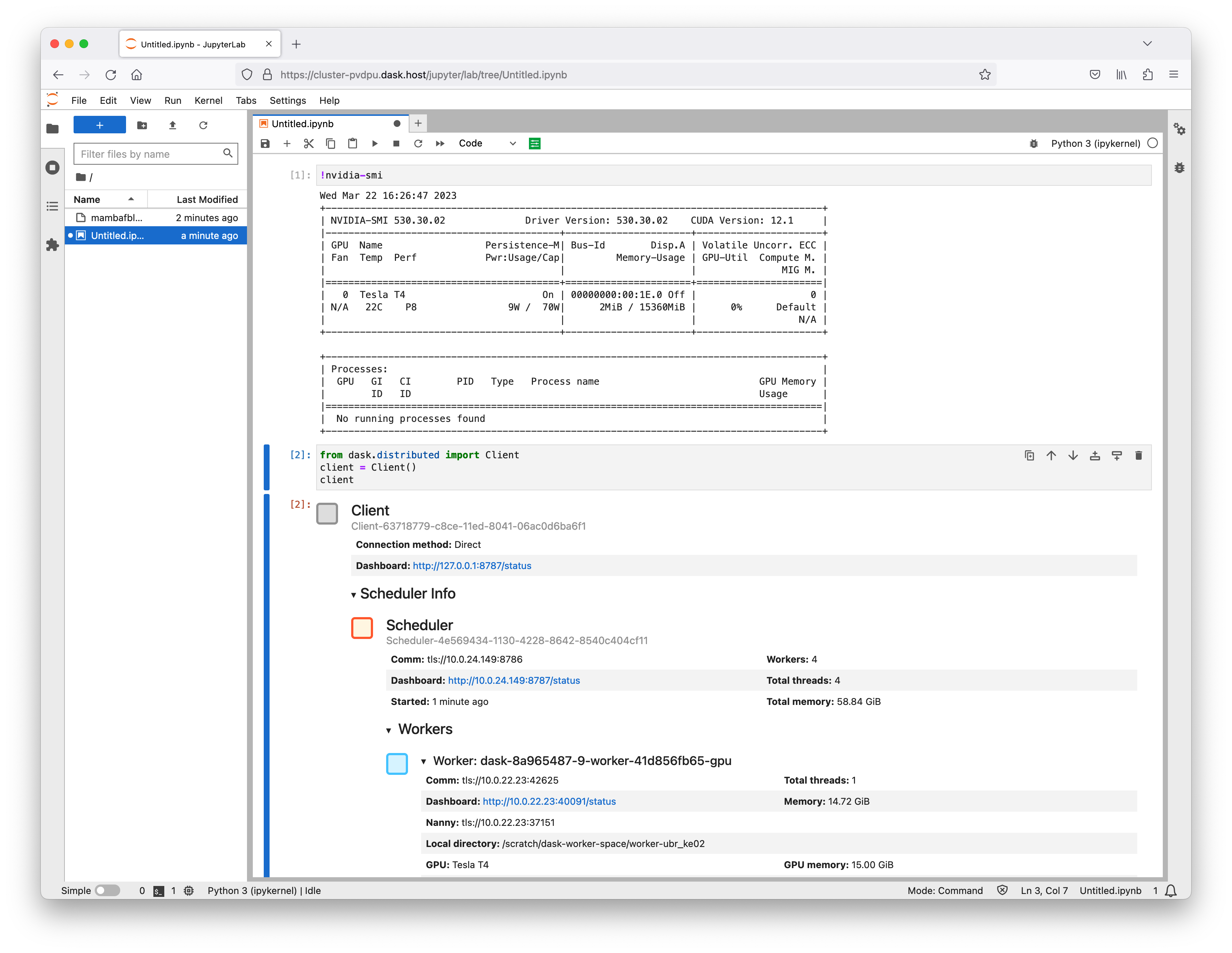

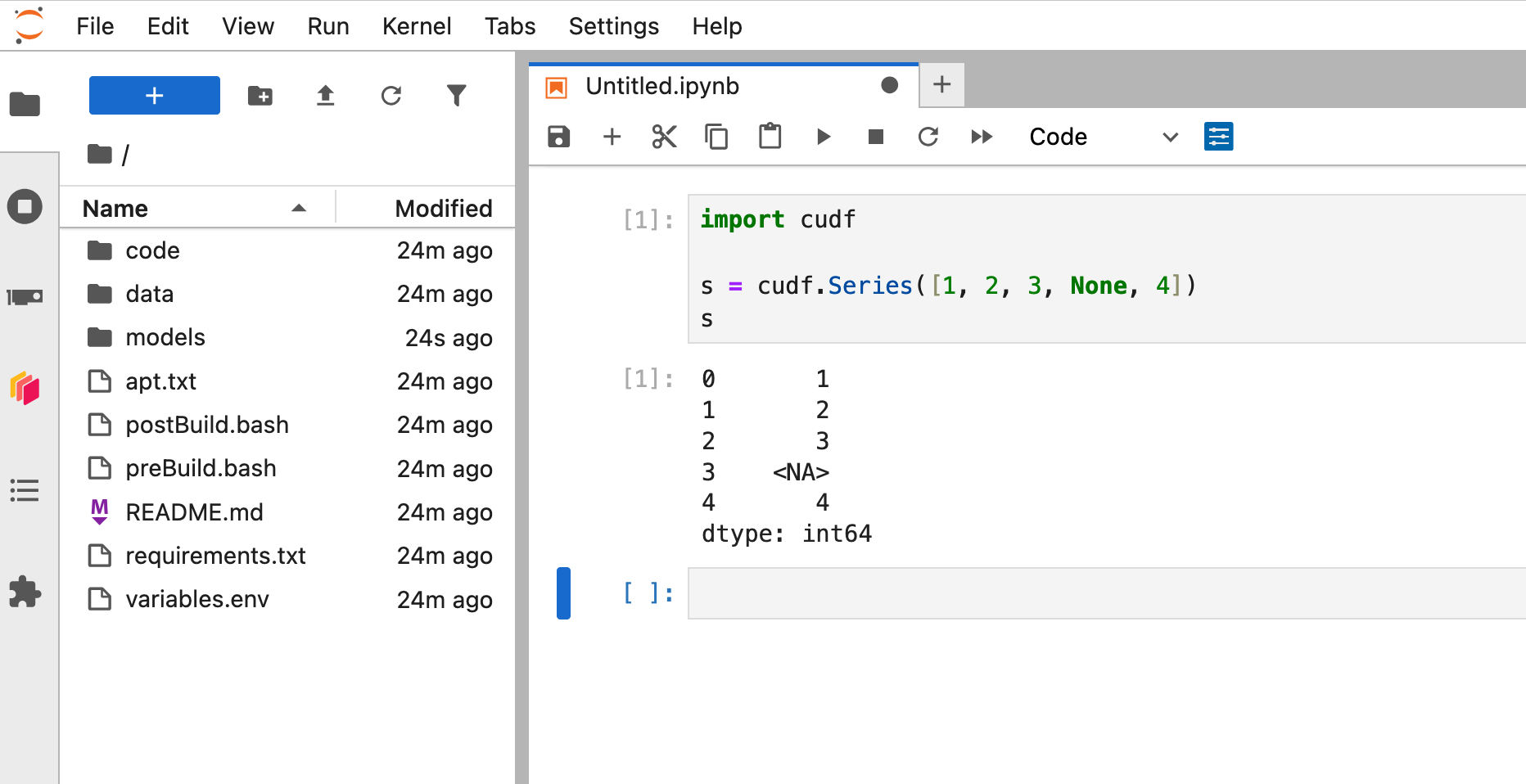

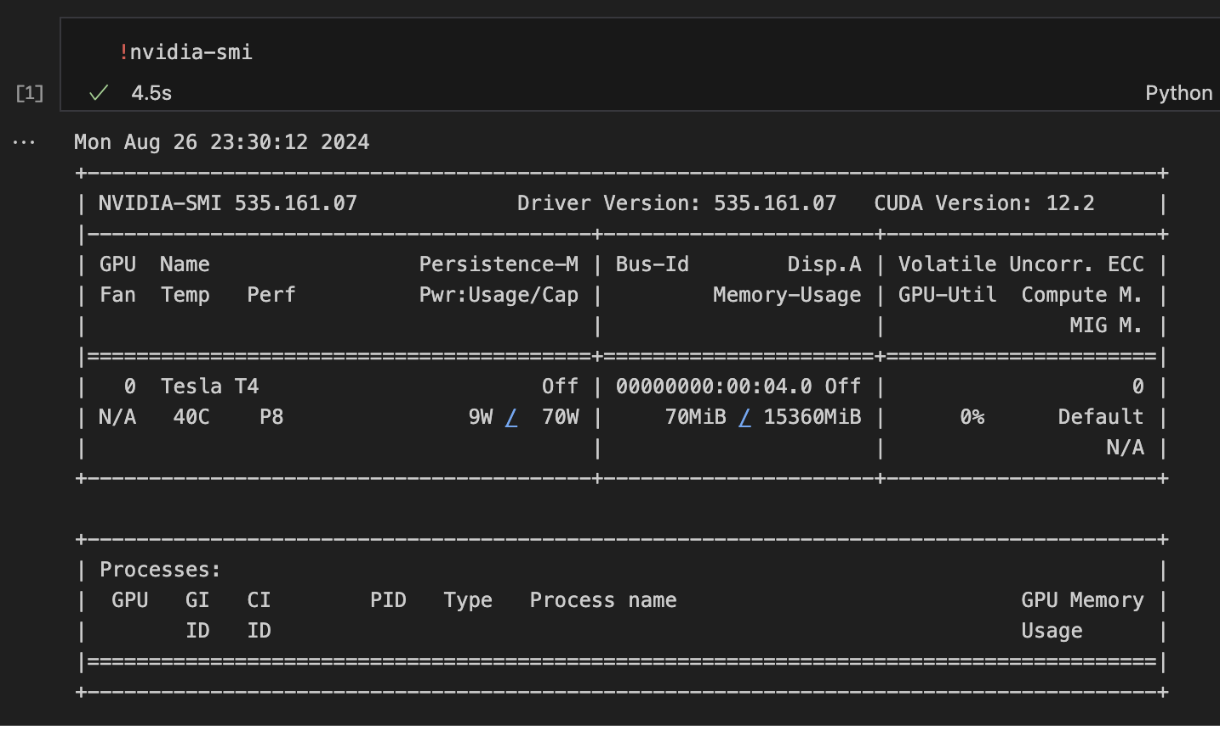

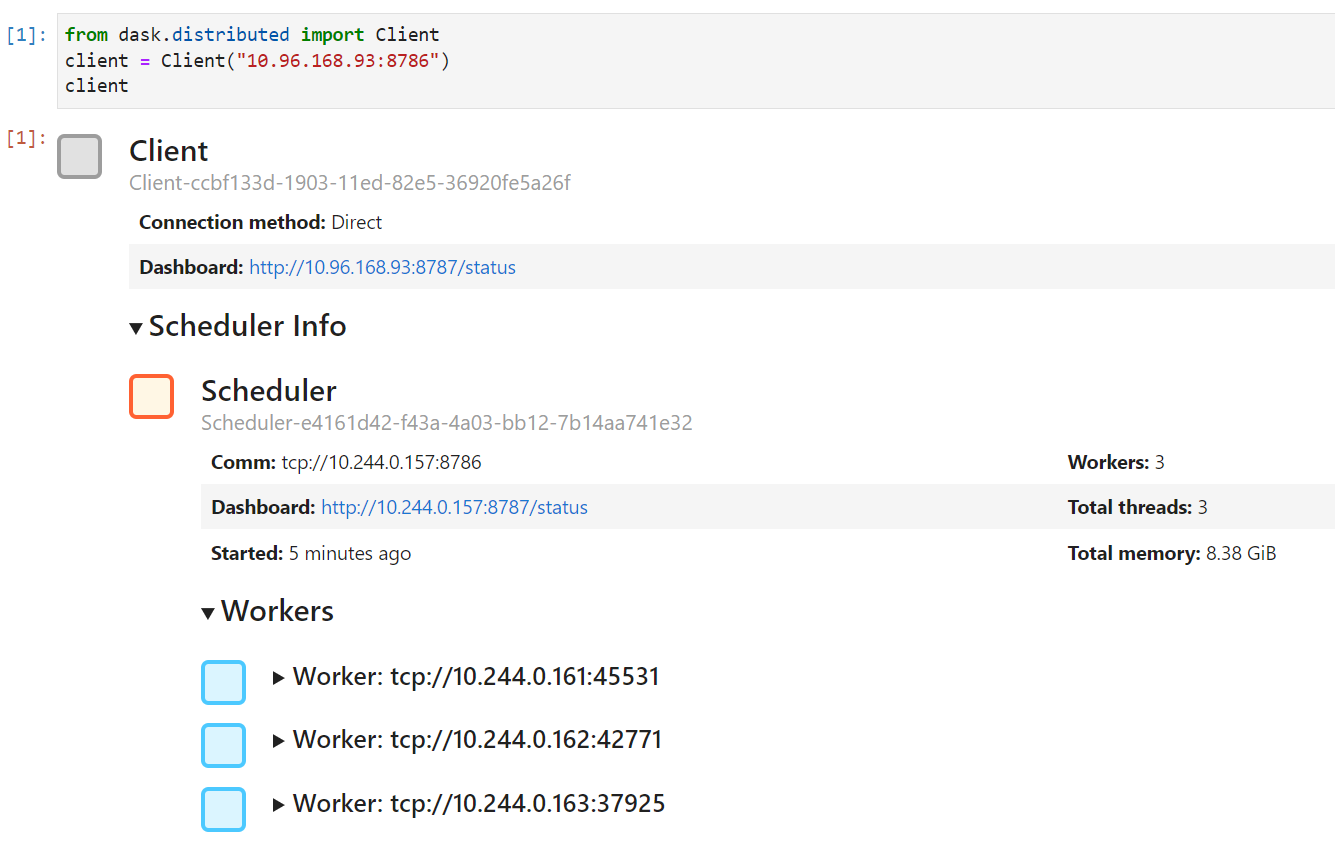

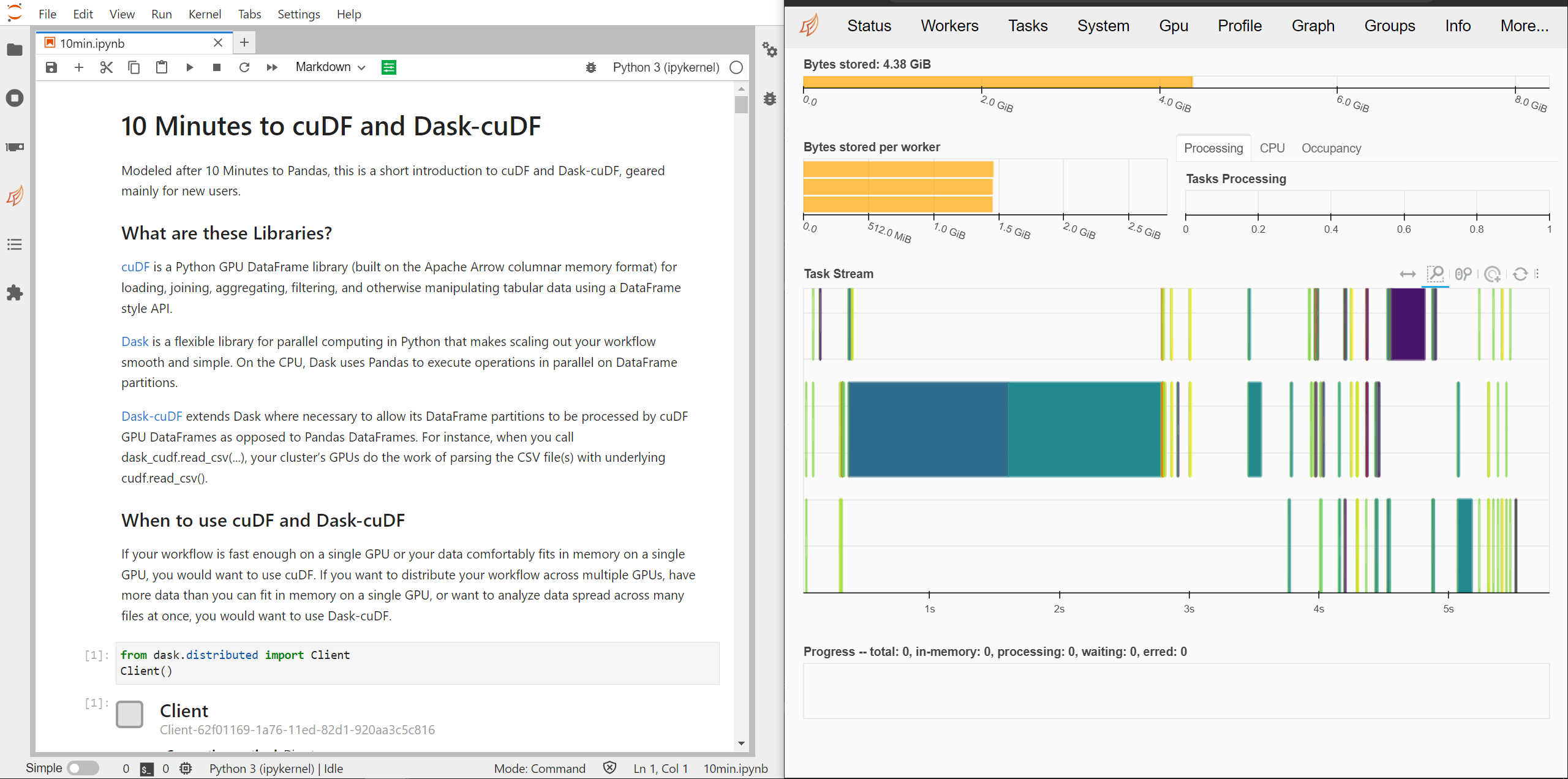

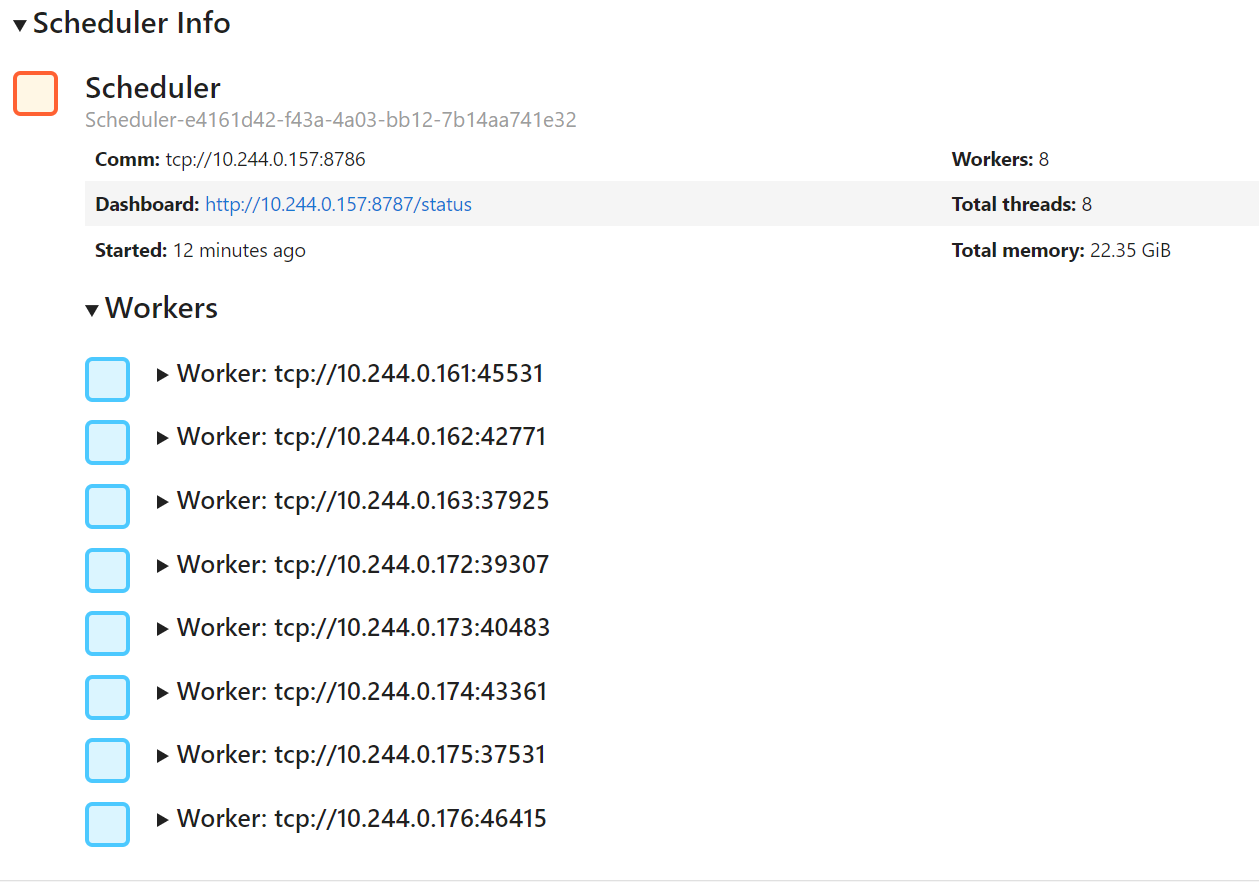

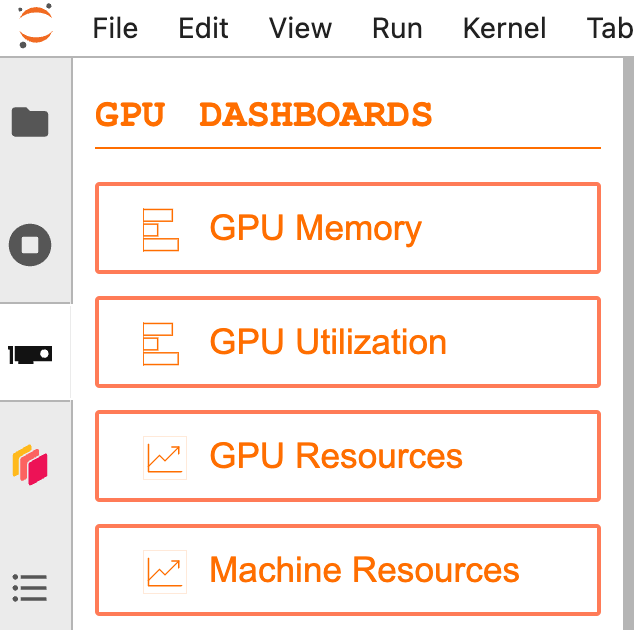

To access Jupyter, navigate to `:8888` in the browser.

In a Python notebook, check that you can import and use RAPIDS libraries like `cudf`.

```ipython

In [1]: import cudf

In [2]: df = cudf.datasets.timeseries()

In [3]: df.head()

Out[3]:

id name x y

timestamp

2000-01-01 00:00:00 1020 Kevin 0.091536 0.664482

2000-01-01 00:00:01 974 Frank 0.683788 -0.467281

2000-01-01 00:00:02 1000 Charlie 0.419740 -0.796866

2000-01-01 00:00:03 1019 Edith 0.488411 0.731661

2000-01-01 00:00:04 998 Quinn 0.651381 -0.525398

```

Open `cudf/10min.ipynb` and execute the cells to explore more of how `cudf` works.

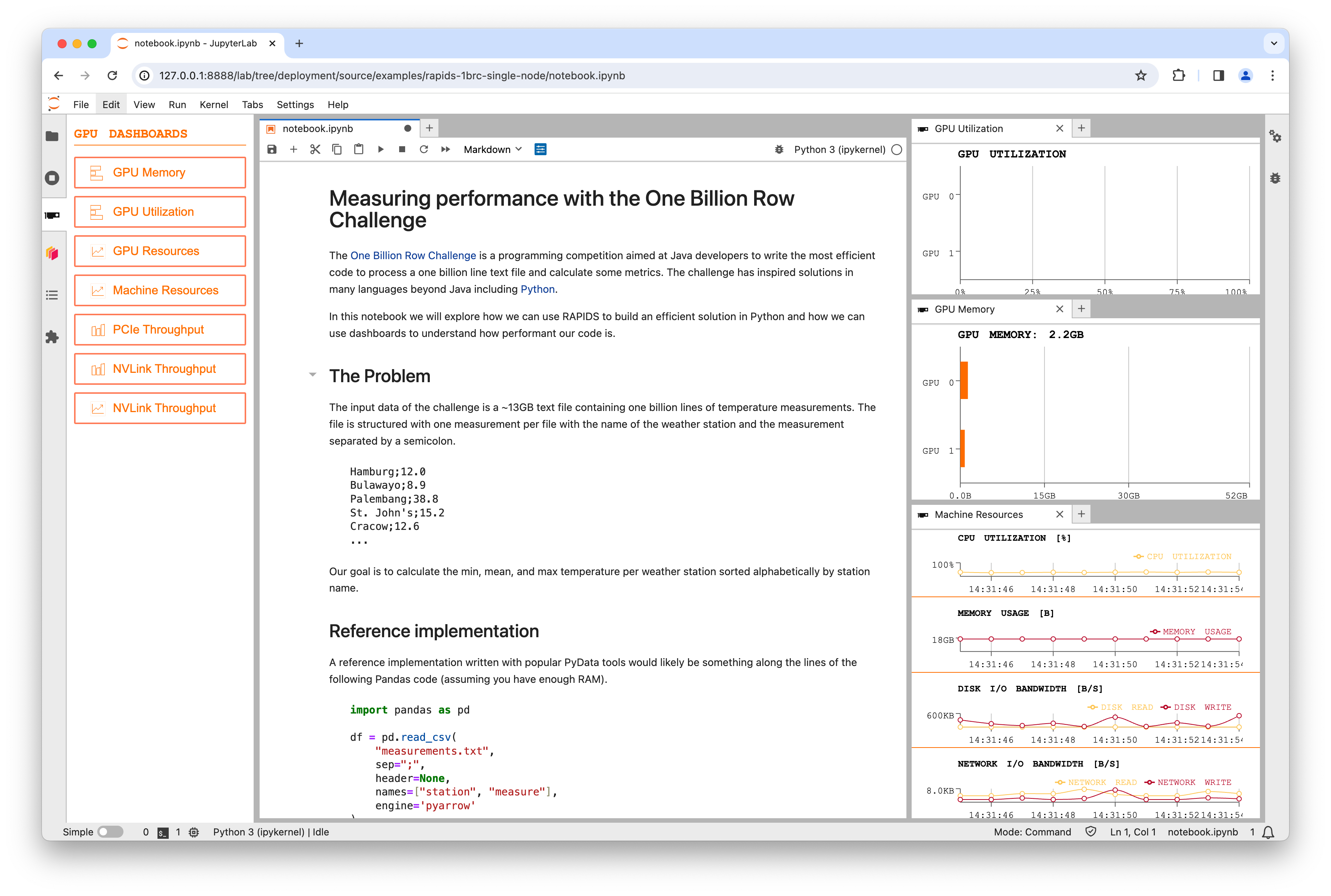

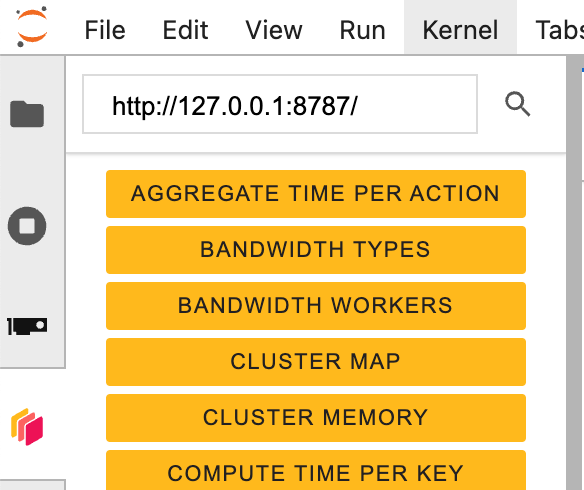

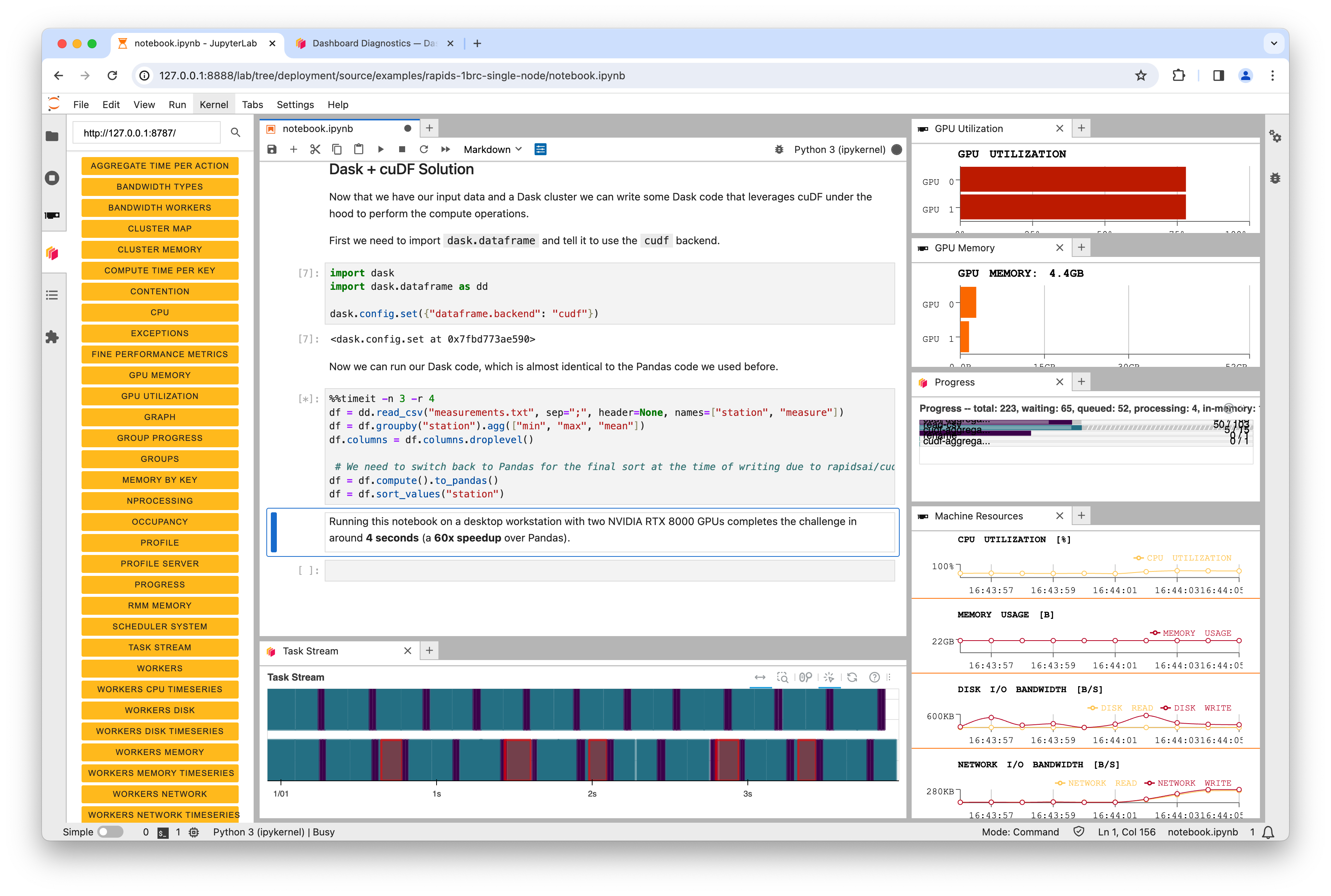

When running a Dask cluster you can also visit `:8787` to monitor the Dask cluster status.

# index.html.md

Let’s create a sample Pod that uses some GPU compute to make sure that everything is working as expected.

```bash

cat << EOF | kubectl create -f -

apiVersion: v1

kind: Pod

metadata:

name: cuda-vectoradd

spec:

restartPolicy: OnFailure

containers:

- name: cuda-vectoradd

image: "nvidia/samples:vectoradd-cuda11.6.0-ubuntu18.04"

resources:

limits:

nvidia.com/gpu: 1

EOF

```

```console

$ kubectl logs pod/cuda-vectoradd

[Vector addition of 50000 elements]

Copy input data from the host memory to the CUDA device

CUDA kernel launch with 196 blocks of 256 threads

Copy output data from the CUDA device to the host memory

Test PASSED

Done

```

If you see `Test PASSED` in the output, you can be confident that your Kubernetes cluster has GPU compute set up correctly.

Next, clean up that Pod.

```console

$ kubectl delete pod cuda-vectoradd

pod "cuda-vectoradd" deleted

```

# index.html.md

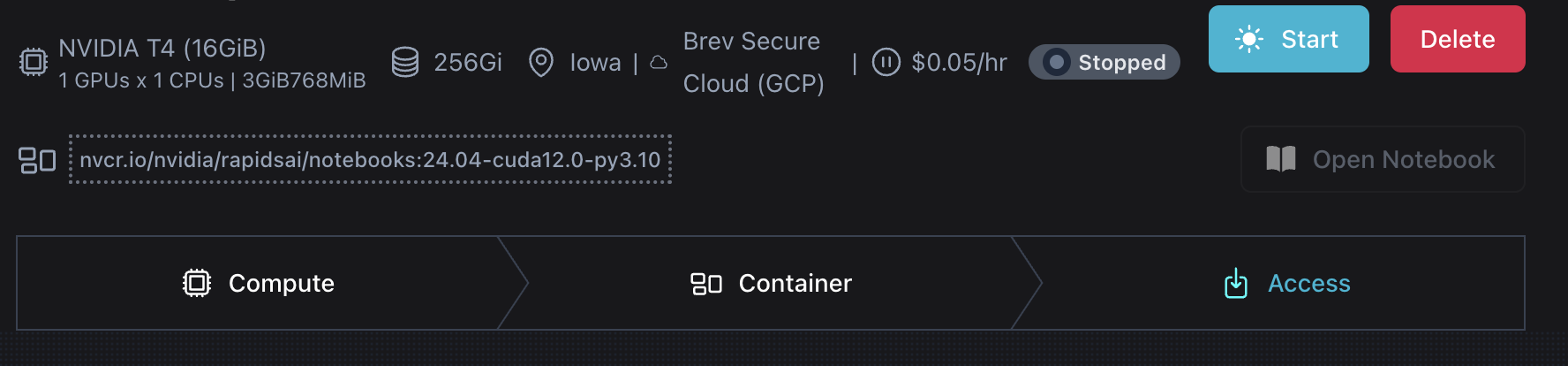

There are a selection of methods you can use to install RAPIDS which you can see via the [RAPIDS release selector](https://docs.rapids.ai/install#selector).

For this example we are going to run the RAPIDS Docker container so we need to know the name of the most recent container.

On the release selector choose **Docker** in the **Method** column.

Then copy the commands shown:

```bash

docker pull rapidsai/notebooks:25.12a-cuda12-py3.13

docker run --gpus all --rm -it \

--shm-size=1g --ulimit memlock=-1 \

-p 8888:8888 -p 8787:8787 -p 8786:8786 \

rapidsai/notebooks:25.12a-cuda12-py3.13

```

#### NOTE

If you see a “docker socket permission denied” error while running these commands try closing and reconnecting your

SSH window. This happens because your user was added to the `docker` group only after you signed in.

# index.html.md

# Continuous Integration

GitHub Actions

Run tests in GitHub Actions that depend on RAPIDS and NVIDIA GPUs.

single-node

# index.html.md

# Does the Dask scheduler need a GPU?

A common question from users deploying Dask clusters is whether the scheduler has different minimum requirements to the workers. This question is compounded when using RAPIDS and GPUs.

#### WARNING

This guide outlines our current advice on scheduler hardware requirements, but this may be subject to change.

**TLDR; It is strongly suggested that your Dask scheduler has matching hardware/software capabilities to the other components in your cluster.**

Therefore, if your workers have GPUs and the RAPIDS libraries installed we recommend that your scheduler does too. However the GPU attached to your scheduler doesn’t need to be as powerful as the GPUs on your workers, as long as it has the same capabilities and driver/CUDA versions.

## What does the scheduler use a GPU for?

The Dask client generates a task graph of operations that it wants to be performed and serializes any data that needs to be sent to the workers. The scheduler handles allocating those tasks to the various Dask workers and passes serialized data back and forth. The workers deserialize the data, perform calculations, serialize the result and pass it back.

This can lead users to logically ask if the scheduler needs the same capabilities as the workers/client. It doesn’t handle the actual data or do any of the user calculations, it just decides where work should go.

Taking this even further you could even ask “Does the Dask scheduler even need to be written in Python?”. Some folks even [experimented with a Rust implementation of the scheduler](https://github.com/It4innovations/rsds) a couple of years ago.

There are two primary reasons why we recommend that the scheduler has the same capabilities:

- There are edge cases where the scheduler does deserialize data.

- Some scheduler optimizations require high-level graphs to be pickled on the client and unpickled on the scheduler.

If your workload doesn’t trigger any edge-cases and you’re not using the high-level graph optimizations then you could likely get away with not having a GPU. But it is likely you will run into problems eventually and the failure-modes will be potentially hard to debug.

### Known edge cases

When calling [`client.submit`](https://docs.dask.org/en/latest/futures.html#distributed.Client.submit) and passing data directly to a function the whole graph is serialized and sent to the scheduler. In order for the scheduler to figure out what to do with it the graph is deserialized. If the data uses GPUs this can cause the scheduler to import RAPIDS libraries, attempt to instantiate a CUDA context and populate the data into GPU memory. If those libraries are missing and/or there are no GPUs this will cause the scheduler to fail.

Many Dask collections also have a meta object which represents the overall collection but without any data. For example a Dask Dataframe has a meta Pandas Dataframe which has the same meta properties and is used during scheduling. If the underlying data is instead a cuDF Dataframe then the meta object will be too, which is deserialized on the scheduler.

### Example failure modes

When using the default TCP communication protocol, the scheduler generally does *not* inspect data communicated between clients and workers, so many workflows will not provoke failure. For example, suppose we set up a Dask cluster and do not provide the scheduler with a GPU. The following simple computation with [CuPy](https://cupy.dev)-backed Dask arrays completes successfully

```python

import cupy

from distributed import Client, wait

import dask.array as da

client = Client(scheduler_file="scheduler.json")

x = cupy.arange(10)

y = da.arange(1000, like=x)

z = (y * 2).persist()

wait(z)

# Now let's look at some results

print(z[:10].compute())

```

We can run this code, giving the scheduler no access to a GPU:

```sh

$ CUDA_VISIBLE_DEVICES="" dask scheduler --protocol tcp --scheduler-file scheduler.json &

$ dask cuda worker --protocol tcp --scheduler-file scheduler.json &

$ python test.py

...

[ 0 2 4 6 8 10 12 14 16 18]

...

```

In contrast, if you provision an [Infiniband-enabled system](azure/infiniband.md) and wish to take advantage of the high-performance network, you will want to use the [UCX](https://openucx.org/) protocol, rather than TCP. Using such a setup without a GPU on the scheduler will not succeed. When the client or workers communicate with the scheduler, any GPU-allocated buffers will be sent directly between GPUs (avoiding a roundtrip to host memory). This is more efficient, but will not succeed if the scheduler does not *have* a GPU. Running the same example from above, but this time using UCX we obtain an error:

```sh

$ CUDA_VISIBLE_DEVICES="" dask scheduler --protocol ucx --scheduler-file scheduler.json &

$ dask cuda worker --protocol ucx --scheduler-file scheduler.json &

$ python test.py

$ CUDA_VISIBLE_DEVICES="" dask scheduler --protocol ucx --scheduler-file foo.json &

$ dask-cuda-worker --protocol ucx --scheduler-file scheduler.json &

$ python test.py

...

2023-01-27 11:01:28,263 - distributed.core - ERROR - CUDA error at: .../rmm/include/rmm/cuda_device.hpp:56: cudaErrorNoDevice no CUDA-capable device is detected

Traceback (most recent call last):

File ".../distributed/distributed/utils.py", line 741, in wrapper

return await func(*args, **kwargs)

File ".../distributed/distributed/comm/ucx.py", line 372, in read

frames = [

File ".../distributed/distributed/comm/ucx.py", line 373, in

device_array(each_size) if is_cuda else host_array(each_size)

File ".../distributed/distributed/comm/ucx.py", line 171, in device_array

return rmm.DeviceBuffer(size=n)

File "device_buffer.pyx", line 85, in rmm._lib.device_buffer.DeviceBuffer.__cinit__

RuntimeError: CUDA error at: .../rmm/include/rmm/cuda_device.hpp:56: cudaErrorNoDevice no CUDA-capable device is detected

2023-01-27 11:01:28,263 - distributed.core - ERROR - Exception while handling op gather

Traceback (most recent call last):

File ".../distributed/distributed/core.py", line 820, in _handle_comm

result = await result

File ".../distributed/distributed/scheduler.py", line 5687, in gather

data, missing_keys, missing_workers = await gather_from_workers(

File ".../distributed/distributed/utils_comm.py", line 80, in gather_from_workers

r = await c

File ".../distributed/distributed/worker.py", line 2872, in get_data_from_worker

return await retry_operation(_get_data, operation="get_data_from_worker")

File ".../distributed/distributed/utils_comm.py", line 419, in retry_operation

return await retry(

File ".../distributed/distributed/utils_comm.py", line 404, in retry

return await coro()

File ".../distributed/distributed/worker.py", line 2852, in _get_data

response = await send_recv(

File ".../distributed/distributed/core.py", line 986, in send_recv

response = await comm.read(deserializers=deserializers)

File ".../distributed/distributed/utils.py", line 741, in wrapper

return await func(*args, **kwargs)

File ".../distributed/distributed/comm/ucx.py", line 372, in read

frames = [

File ".../distributed/distributed/comm/ucx.py", line 373, in

device_array(each_size) if is_cuda else host_array(each_size)

File ".../distributed/distributed/comm/ucx.py", line 171, in device_array

return rmm.DeviceBuffer(size=n)

File "device_buffer.pyx", line 85, in rmm._lib.device_buffer.DeviceBuffer.__cinit__

RuntimeError: CUDA error at: .../rmm/include/rmm/cuda_device.hpp:56: cudaErrorNoDevice no CUDA-capable device is detected

Traceback (most recent call last):

File "test.py", line 15, in

print(z[:10].compute())

File ".../dask/dask/base.py", line 314, in compute

(result,) = compute(self, traverse=False, **kwargs)

File ".../dask/dask/base.py", line 599, in compute

results = schedule(dsk, keys, **kwargs)

File ".../distributed/distributed/client.py", line 3144, in get

results = self.gather(packed, asynchronous=asynchronous, direct=direct)

File ".../distributed/distributed/client.py", line 2313, in gather

return self.sync(

File ".../distributed/distributed/utils.py", line 338, in sync

return sync(

File ".../distributed/distributed/utils.py", line 405, in sync

raise exc.with_traceback(tb)

File ".../distributed/distributed/utils.py", line 378, in f

result = yield future

File ".../tornado/gen.py", line 769, in run

value = future.result()

File ".../distributed/distributed/client.py", line 2205, in _gather

response = await future

File ".../distributed/distributed/client.py", line 2256, in _gather_remote

response = await retry_operation(self.scheduler.gather, keys=keys)

File ".../distributed/distributed/utils_comm.py", line 419, in retry_operation

return await retry(

File ".../distributed/distributed/utils_comm.py", line 404, in retry

return await coro()

File ".../distributed/distributed/core.py", line 1221, in send_recv_from_rpc

return await send_recv(comm=comm, op=key, **kwargs)

File ".../distributed/distributed/core.py", line 1011, in send_recv

raise exc.with_traceback(tb)

File ".../distributed/distributed/core.py", line 820, in _handle_comm

result = await result

File ".../distributed/distributed/scheduler.py", line 5687, in gather

data, missing_keys, missing_workers = await gather_from_workers(

File ".../distributed/distributed/utils_comm.py", line 80, in gather_from_workers

r = await c

File ".../distributed/distributed/worker.py", line 2872, in get_data_from_worker

return await retry_operation(_get_data, operation="get_data_from_worker")

File ".../distributed/distributed/utils_comm.py", line 419, in retry_operation

return await retry(

File ".../distributed/distributed/utils_comm.py", line 404, in retry

return await coro()

File ".../distributed/distributed/worker.py", line 2852, in _get_data

response = await send_recv(

File ".../distributed/distributed/core.py", line 986, in send_recv

response = await comm.read(deserializers=deserializers)

File ".../distributed/distributed/utils.py", line 741, in wrapper

return await func(*args, **kwargs)

File ".../distributed/distributed/comm/ucx.py", line 372, in read

frames = [

File ".../distributed/distributed/comm/ucx.py", line 373, in

device_array(each_size) if is_cuda else host_array(each_size)

File ".../distributed/distributed/comm/ucx.py", line 171, in device_array

return rmm.DeviceBuffer(size=n)

File "device_buffer.pyx", line 85, in rmm._lib.device_buffer.DeviceBuffer.__cinit__

RuntimeError: CUDA error at: .../rmm/include/rmm/cuda_device.hpp:56: cudaErrorNoDevice no CUDA-capable device is detected

...

```

The critical error comes from [RMM](https://docs.rapids.ai/api/rmm/nightly/), we’re attempting to allocate a [`DeviceBuffer`](https://docs.rapids.ai/api/rmm/nightly/basics.html#devicebuffers) on the scheduler, but there is no GPU available to do so:

```pytb

File ".../distributed/distributed/comm/ucx.py", line 171, in device_array

return rmm.DeviceBuffer(size=n)

File "device_buffer.pyx", line 85, in rmm._lib.device_buffer.DeviceBuffer.__cinit__

RuntimeError: CUDA error at: .../rmm/include/rmm/cuda_device.hpp:56: cudaErrorNoDevice no CUDA-capable device is detected

```

### Scheduler optimizations and High-Level graphs

The Dask community is actively working on implementing high-level graphs which will both speed up client -> scheduler communication and allow the scheduler to make advanced optimizations such as predicate pushdown.

Much effort has been put into using existing serialization strategies to communicate the HLG but this has proven prohibitively difficult to implement. The current plan is to simplify HighLevelGraph/Layer so that the entire HLG can be pickled on the client, sent to the scheduler as a single binary blob, and then unpickled/materialized (HLG->dict) on the scheduler. The problem with this new plan is that the pickle/un-pickle convention will require the scheduler to have the same environment as the client. If any Layer logic also requires a device allocation, then this approach also requires the scheduler to have access to a GPU.

## So what are the minimum requirements of the scheduler?

From a software perspective we recommend that the Python environment on the client, scheduler and workers all match. Given that the user is expected to ensure the worker has the same environment as the client it is not much of a burden to ensure the scheduler also has the same environment.

From a hardware perspective we recommend that the scheduler has the same capabilities, but not necessarily the same quantity of resource. Therefore if the workers have one or more GPUs we recommend that the scheduler has access to one GPU with matching NVIDIA driver and CUDA versions. In a large multi-node cluster deployment on a cloud platform this may mean the workers are launched on VMs with 8 GPUs and the scheduler is launched on a smaller VM with one GPU. You could also select a less powerful GPU such as those intended for inferencing for your scheduler like a T4, provided it has the same CUDA capabilities, NVIDIA driver version and CUDA/CUDA Toolkit version.

This balance means we can guarantee things function as intended, but reduces cost because placing the scheduler on an 8 GPU node would be a waste of resources.

# index.html.md

# Colocate Dask workers on Kubernetes while using nodes with multiple GPUs

To optimize performance when working with nodes that have multiple GPUs, a best practice is to schedule Dask workers in a tightly grouped manner, thereby minimizing communication overhead between worker pods. This guide provides a step-by-step process for adding Pod affinities to worker pods ensuring they are scheduled together as much as possible on Google Kubernetes Engine (GKE), but the principles can be adapted for use with other Kubernetes distributions.

## Prerequisites

First you’ll need to have the [`gcloud` CLI tool](https://cloud.google.com/sdk/gcloud) installed along with [`kubectl`](https://kubernetes.io/docs/tasks/tools/), [`helm`](https://helm.sh/docs/intro/install/), etc for managing Kubernetes.

Ensure you are logged into the `gcloud` CLI.

```bash

$ gcloud init

```

## Create the Kubernetes cluster

Now we can launch a GPU enabled GKE cluster.

```bash

$ gcloud container clusters create rapids-gpu \

--accelerator type=nvidia-tesla-a100,count=2 --machine-type a2-highgpu-2g \

--zone us-central1-c --release-channel stable

```

With this command, you’ve launched a GKE cluster called `rapids-gpu`. You’ve specified that it should use nodes of type

a2-highgpu-2g, each with two A100 GPUs.

## Install drivers

Next, [install the NVIDIA drivers](https://cloud.google.com/kubernetes-engine/docs/how-to/gpus#installing_drivers) onto each node.

```console

$ kubectl apply -f https://raw.githubusercontent.com/GoogleCloudPlatform/container-engine-accelerators/master/nvidia-driver-installer/cos/daemonset-preloaded-latest.yaml

daemonset.apps/nvidia-driver-installer created

```

Verify that the NVIDIA drivers are successfully installed.

```console

$ kubectl get po -A --watch | grep nvidia

kube-system nvidia-driver-installer-6zwcn 1/1 Running 0 8m47s

kube-system nvidia-driver-installer-8zmmn 1/1 Running 0 8m47s

kube-system nvidia-driver-installer-mjkb8 1/1 Running 0 8m47s

kube-system nvidia-gpu-device-plugin-5ffkm 1/1 Running 0 13m

kube-system nvidia-gpu-device-plugin-d599s 1/1 Running 0 13m

kube-system nvidia-gpu-device-plugin-jrgjh 1/1 Running 0 13m

```

After your drivers are installed, you are ready to test your cluster.

Let’s create a sample Pod that uses some GPU compute to make sure that everything is working as expected.

```bash

cat << EOF | kubectl create -f -

apiVersion: v1

kind: Pod

metadata:

name: cuda-vectoradd

spec:

restartPolicy: OnFailure

containers:

- name: cuda-vectoradd

image: "nvidia/samples:vectoradd-cuda11.6.0-ubuntu18.04"

resources:

limits:

nvidia.com/gpu: 1

EOF

```

```console

$ kubectl logs pod/cuda-vectoradd

[Vector addition of 50000 elements]

Copy input data from the host memory to the CUDA device

CUDA kernel launch with 196 blocks of 256 threads

Copy output data from the CUDA device to the host memory

Test PASSED

Done

```

If you see `Test PASSED` in the output, you can be confident that your Kubernetes cluster has GPU compute set up correctly.

Next, clean up that Pod.

```console

$ kubectl delete pod cuda-vectoradd

pod "cuda-vectoradd" deleted

```

### Installing Dask operator with Helm

The operator has a Helm chart which can be used to manage the installation of the operator. Follow the instructions provided in the [Dask documentation](https://kubernetes.dask.org/en/latest/installing.html#installing-with-helm), or alternatively can be installed via:

```console

$ helm install --create-namespace -n dask-operator --generate-name --repo https://helm.dask.org dask-kubernetes-operator

NAME: dask-kubernetes-operator-1666875935

NAMESPACE: dask-operator

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Operator has been installed successfully.

```

## Configuring a RAPIDS `DaskCluster`

To configure the `DaskCluster` resource to run RAPIDS you need to set a few things:

- The container image must contain RAPIDS, the [official RAPIDS container images](https://docs.rapids.ai/install/#docker) are a good choice for this.

- The Dask workers must be configured with one or more NVIDIA GPU resources.

- The worker command must be set to `dask-cuda-worker`.

## Creating a RAPIDS `DaskCluster` using `kubectl`

Here is an example resource manifest for launching a RAPIDS Dask cluster with worker Pod affinity

```yaml

# rapids-dask-cluster.yaml

apiVersion: kubernetes.dask.org/v1

kind: DaskCluster

metadata:

name: rapids-dask-cluster

labels:

dask.org/cluster-name: rapids-dask-cluster

spec:

worker:

replicas: 2

spec:

containers:

- name: worker

image: "rapidsai/base:25.12a-cuda12-py3.13"

imagePullPolicy: "IfNotPresent"

args:

- dask-cuda-worker

- --name

- $(DASK_WORKER_NAME)

resources:

limits:

nvidia.com/gpu: "1"

affinity:

podAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: dask.org/component

operator: In

values:

- worker

topologyKey: kubernetes.io/hostname

scheduler:

spec:

containers:

- name: scheduler

image: "rapidsai/base:25.12a-cuda12-py3.13"

imagePullPolicy: "IfNotPresent"

env:

args:

- dask-scheduler

ports:

- name: tcp-comm

containerPort: 8786

protocol: TCP

- name: http-dashboard

containerPort: 8787

protocol: TCP

readinessProbe:

httpGet:

port: http-dashboard

path: /health

initialDelaySeconds: 5

periodSeconds: 10

livenessProbe:

httpGet:

port: http-dashboard

path: /health

initialDelaySeconds: 15

periodSeconds: 20

resources:

limits:

nvidia.com/gpu: "1"

service:

type: ClusterIP

selector:

dask.org/cluster-name: rapids-dask-cluster

dask.org/component: scheduler

ports:

- name: tcp-comm

protocol: TCP

port: 8786

targetPort: "tcp-comm"

- name: http-dashboard

protocol: TCP

port: 8787

targetPort: "http-dashboard"

```

You can create this cluster with `kubectl`.

```bash

$ kubectl apply -f rapids-dask-cluster.yaml

```

### Manifest breakdown

Most of this manifest is explained in the [Dask Operator](https://docs.rapids.ai/deployment/stable/tools/kubernetes/dask-operator/#example-using-kubecluster) documentation in the tools section of the RAPIDS documentation.

The only addition made to the example from the above documentation page is the following section in the worker configuration

```yaml

# ...

affinity:

podAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: dask.org/component

operator: In

values:

- worker

topologyKey: kubernetes.io/hostname

# ...

```

For the Dask Worker Pod configuration, we are setting a Pod affinity using the name of the node as the topology key. [Pod affinity](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#inter-pod-affinity-and-anti-affinity) in Kubernetes allows you to constrain which nodes the Pod can be scheduled on and allows you to configure a set of workloads that should be co-located in the same defined topology, in this case, preferring to place two worker pods on the same node. This is also intended to be a soft requirement as we are using the `preferredDuringSchedulingIgnoredDuringExecution` type of Pod affinity. The Kubernetes scheduler tries to find a node which meets the rule. If a matching node is not available, the Kubernetes scheduler still schedules the Pod on any available node. This ensures that you will not face any issues with the Dask cluster even if placing worker pods on nodes already in use is not possible.

### Accessing your Dask cluster

Once you have created your `DaskCluster` resource we can use `kubectl` to check the status of all the other resources it created for us.

```console

$ kubectl get all -l dask.org/cluster-name=rapids-dask-cluster -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/rapids-dask-cluster-default-worker-12a055b2db-7b5bf8f66c-9mb59 1/1 Running 0 2s 10.244.2.3 gke-rapids-gpu-1-default-pool-d85b49-2545

pod/rapids-dask-cluster-default-worker-34437735ae-6fdd787f75-sdqzg 1/1 Running 0 2s 10.244.2.4 gke-rapids-gpu-1-default-pool-d85b49-2545

pod/rapids-dask-cluster-scheduler-6656cb88f6-cgm4t 0/1 Running 0 3s 10.244.3.3 gke-rapids-gpu-1-default-pool-d85b49-2f31

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/rapids-dask-cluster-scheduler ClusterIP 10.96.231.110 8786/TCP,8787/TCP 3s dask.org/cluster-name=rapids-dask-cluster,dask.org/component=scheduler

```

Here you can see our scheduler Pod and two worker pods along with the scheduler service. The two worker pods are placed in the same node as desired, while the scheduler Pod is placed on a different node.

If you have a Python session running within the Kubernetes cluster (like the [example one on the Kubernetes page](../platforms/kubernetes.md)) you should be able

to connect a Dask distributed client directly.

```python

from dask.distributed import Client

client = Client("rapids-dask-cluster-scheduler:8786")

```

Alternatively if you are outside of the Kubernetes cluster you can change the `Service` to use [`LoadBalancer`](https://kubernetes.io/docs/concepts/services-networking/service/#loadbalancer) or [`NodePort`](https://kubernetes.io/docs/concepts/services-networking/service/#type-nodeport) or use `kubectl` to port forward the connection locally.

```console

$ kubectl port-forward svc/rapids-dask-cluster-service 8786:8786

Forwarding from 127.0.0.1:8786 -> 8786

```

```python

from dask.distributed import Client

client = Client("localhost:8786")

```

## Example using `KubeCluster`

In addition to creating clusters via `kubectl` you can also do so from Python with [`dask_kubernetes.operator.KubeCluster`](https://kubernetes.dask.org/en/latest/operator_kubecluster.html#dask_kubernetes.operator.KubeCluster). This class implements the Dask Cluster Manager interface and under the hood creates and manages the `DaskCluster` resource for you. You can also generate a spec with make_cluster_spec() which KubeCluster uses internally and then modify it with your custom options. We will use this to add node affinity to the scheduler.

In the following example, the same cluster configuration as the `kubectl` example is used.

```python

from dask_kubernetes.operator import KubeCluster, make_cluster_spec

spec = make_cluster_spec(

name="rapids-dask-cluster",

image="rapidsai/base:25.12a-cuda12-py3.13",

n_workers=2,

resources={"limits": {"nvidia.com/gpu": "1"}},

worker_command="dask-cuda-worker",

)

```

To add the node affinity to the worker, you can create a custom dictionary specifying the type of Pod affinity and the topology key.

```python

affinity_config = {

"podAffinity": {

"preferredDuringSchedulingIgnoredDuringExecution": [

{

"weight": 100,

"podAffinityTerm": {

"labelSelector": {

"matchExpressions": [

{

"key": "dask.org/component",

"operator": "In",

"values": ["worker"],

}

]

},

"topologyKey": "kubernetes.io/hostname",

},

}

]

}

}

```

Now you can add this configuration to the spec created in the previous step, and create the Dask cluster using this custom spec.

```python

spec["spec"]["worker"]["spec"]["affinity"] = affinity_config

cluster = KubeCluster(custom_cluster_spec=spec)

```

If we check with `kubectl` we can see the above Python generated the same `DaskCluster` resource as the `kubectl` example above.

```console

$ kubectl get daskclusters

NAME AGE

rapids-dask-cluster 3m28s

$ kubectl get all -l dask.org/cluster-name=rapids-dask-cluster -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/rapids-dask-cluster-default-worker-12a055b2db-7b5bf8f66c-9mb59 1/1 Running 0 2s 10.244.2.3 gke-rapids-gpu-1-default-pool-d85b49-2545

pod/rapids-dask-cluster-default-worker-34437735ae-6fdd787f75-sdqzg 1/1 Running 0 2s 10.244.2.4 gke-rapids-gpu-1-default-pool-d85b49-2545

pod/rapids-dask-cluster-scheduler-6656cb88f6-cgm4t 0/1 Running 0 3s 10.244.3.3 gke-rapids-gpu-1-default-pool-d85b49-2f31

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/rapids-dask-cluster-scheduler ClusterIP 10.96.231.110 8786/TCP,8787/TCP 3s dask.org/cluster-name=rapids-dask-cluster,dask.org/component=scheduler

```

With this cluster object in Python we can also connect a client to it directly without needing to know the address as Dask will discover that for us. It also automatically sets up port forwarding if you are outside of the Kubernetes cluster.

```python

from dask.distributed import Client

client = Client(cluster)

```

This object can also be used to scale the workers up and down.

```python

cluster.scale(5)

```

And to manually close the cluster.

```python

cluster.close()

```

#### NOTE

By default the `KubeCluster` command registers an exit hook so when the Python process exits the cluster is deleted automatically. You can disable this by setting `KubeCluster(..., shutdown_on_close=False)` when launching the cluster.

This is useful if you have a multi-stage pipeline made up of multiple Python processes and you want your Dask cluster to persist between them.

You can also connect a `KubeCluster` object to your existing cluster with `cluster = KubeCluster.from_name(name="rapids-dask")` if you wish to use the cluster or manually call `cluster.close()` in the future.

# index.html.md

# GPU optimization for the Dask scheduler on Kubernetes

An optimization users can make while deploying Dask clusters is to ensure that the scheduler is placed on a node with a less powerful GPU to reduce overall cost. [This previous guide](https://docs.rapids.ai/deployment/stable/guides/scheduler-gpu-requirements/) explains why the scheduler needs access to the same environment as the workers, as there are a few edge cases where the scheduler does serialize data and unpickles high-level graphs.

#### WARNING

This guide outlines our current advice on scheduler hardware requirements, but this may be subject to change.

However, when working with nodes with multiple GPUs, placing the scheduler on one of these nodes would be a waste of resources. This guide walks through the steps to create a Kubernetes cluster on GKE along with a nodepool of less powerful Nvidia Tesla T4 GPUs and placing the scheduler on this node using Kubernetes node affinity.

## Prerequisites

First you’ll need to have the [`gcloud` CLI tool](https://cloud.google.com/sdk/gcloud) installed along with [`kubectl`](https://kubernetes.io/docs/tasks/tools/), [`helm`](https://helm.sh/docs/intro/install/), etc for managing Kubernetes.

Ensure you are logged into the `gcloud` CLI.

```bash

$ gcloud init

```

## Create the Kubernetes cluster

Now we can launch a GPU enabled GKE cluster.

```bash

$ gcloud container clusters create rapids-gpu \

--accelerator type=nvidia-tesla-a100,count=2 --machine-type a2-highgpu-2g \

--zone us-central1-c --release-channel stable

```

With this command, you’ve launched a GKE cluster called `rapids-gpu`. You’ve specified that it should use nodes of type

a2-highgpu-2g, each with two A100 GPUs.

## Create the dedicated nodepool for the scheduler

Now create a new nodepool on this GPU cluster.

```bash

$ gcloud container node-pools create scheduler-pool --cluster rapids-gpu \

--accelerator type=nvidia-tesla-t4,count=1 --machine-type n1-standard-2 \

--num-nodes 1 --node-labels dedicated=scheduler --zone us-central1-c

```

With this command, you’ve created an additional nodepool called `scheduler-pool` with 1 node. You’ve also specified that it should use a node of type n1-standard-2, with one T4 GPU.

We also add a Kubernetes label `dedicated=scheduled` to the node in this nodepool which will be used to place the scheduler onto this node.

## Install drivers

Next, [install the NVIDIA drivers](https://cloud.google.com/kubernetes-engine/docs/how-to/gpus#installing_drivers) onto each node.

```console

$ kubectl apply -f https://raw.githubusercontent.com/GoogleCloudPlatform/container-engine-accelerators/master/nvidia-driver-installer/cos/daemonset-preloaded-latest.yaml

daemonset.apps/nvidia-driver-installer created

```

Verify that the NVIDIA drivers are successfully installed.

```console

$ kubectl get po -A --watch | grep nvidia

kube-system nvidia-driver-installer-6zwcn 1/1 Running 0 8m47s

kube-system nvidia-driver-installer-8zmmn 1/1 Running 0 8m47s

kube-system nvidia-driver-installer-mjkb8 1/1 Running 0 8m47s

kube-system nvidia-gpu-device-plugin-5ffkm 1/1 Running 0 13m

kube-system nvidia-gpu-device-plugin-d599s 1/1 Running 0 13m

kube-system nvidia-gpu-device-plugin-jrgjh 1/1 Running 0 13m

```

After your drivers are installed, you are ready to test your cluster.

Let’s create a sample Pod that uses some GPU compute to make sure that everything is working as expected.

```bash

cat << EOF | kubectl create -f -

apiVersion: v1

kind: Pod

metadata:

name: cuda-vectoradd

spec:

restartPolicy: OnFailure

containers:

- name: cuda-vectoradd

image: "nvidia/samples:vectoradd-cuda11.6.0-ubuntu18.04"

resources:

limits:

nvidia.com/gpu: 1

EOF

```

```console

$ kubectl logs pod/cuda-vectoradd

[Vector addition of 50000 elements]

Copy input data from the host memory to the CUDA device

CUDA kernel launch with 196 blocks of 256 threads

Copy output data from the CUDA device to the host memory

Test PASSED

Done

```

If you see `Test PASSED` in the output, you can be confident that your Kubernetes cluster has GPU compute set up correctly.

Next, clean up that Pod.

```console

$ kubectl delete pod cuda-vectoradd

pod "cuda-vectoradd" deleted

```

### Installing Dask operator with Helm

The operator has a Helm chart which can be used to manage the installation of the operator. The chart is published in the [Dask Helm Repo](https://helm.dask.org) repository, and can be installed via:

```console

$ helm repo add dask https://helm.dask.org

"dask" has been added to your repositories

```

```console

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "dask" chart repository

Update Complete. ⎈Happy Helming!⎈

```

```console

$ helm install --create-namespace -n dask-operator --generate-name dask/dask-kubernetes-operator

NAME: dask-kubernetes-operator-1666875935

NAMESPACE: dask-operator

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Operator has been installed successfully.

```

Then you should be able to list your Dask clusters via `kubectl`.

```console

$ kubectl get daskclusters

No resources found in default namespace.

```

We can also check the operator Pod is running:

```console

$ kubectl get pods -A -l app.kubernetes.io/name=dask-kubernetes-operator

NAMESPACE NAME READY STATUS RESTARTS AGE

dask-operator dask-kubernetes-operator-775b8bbbd5-zdrf7 1/1 Running 0 74s

```

## Configuring a RAPIDS `DaskCluster`

To configure the `DaskCluster` resource to run RAPIDS you need to set a few things:

- The container image must contain RAPIDS, the [official RAPIDS container images](https://docs.rapids.ai/install/#docker) are a good choice for this.

- The Dask workers must be configured with one or more NVIDIA GPU resources.

- The worker command must be set to `dask-cuda-worker`.

## Creating a RAPIDS `DaskCluster` using `kubectl`

Here is an example resource manifest for launching a RAPIDS Dask cluster with the scheduler optimization

```yaml

# rapids-dask-cluster.yaml

apiVersion: kubernetes.dask.org/v1

kind: DaskCluster

metadata:

name: rapids-dask-cluster

labels:

dask.org/cluster-name: rapids-dask-cluster

spec:

worker:

replicas: 2

spec:

containers:

- name: worker

image: "rapidsai/base:25.12a-cuda12-py3.13"

imagePullPolicy: "IfNotPresent"

args:

- dask-cuda-worker

- --name

- $(DASK_WORKER_NAME)

resources:

limits:

nvidia.com/gpu: "1"

scheduler:

spec:

containers:

- name: scheduler

image: "rapidsai/base:25.12a-cuda12-py3.13"

imagePullPolicy: "IfNotPresent"

env:

args:

- dask-scheduler

ports:

- name: tcp-comm

containerPort: 8786

protocol: TCP

- name: http-dashboard

containerPort: 8787

protocol: TCP

readinessProbe:

httpGet:

port: http-dashboard

path: /health

initialDelaySeconds: 5

periodSeconds: 10

livenessProbe:

httpGet:

port: http-dashboard

path: /health

initialDelaySeconds: 15

periodSeconds: 20

resources:

limits:

nvidia.com/gpu: "1"

affinity:

nodeAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

preference:

matchExpressions:

- key: dedicated

operator: In

values:

- scheduler

service:

type: ClusterIP

selector:

dask.org/cluster-name: rapids-dask-cluster

dask.org/component: scheduler

ports:

- name: tcp-comm

protocol: TCP

port: 8786

targetPort: "tcp-comm"

- name: http-dashboard

protocol: TCP

port: 8787

targetPort: "http-dashboard"

```

You can create this cluster with `kubectl`.

```bash

$ kubectl apply -f rapids-dask-cluster.yaml

```

### Manifest breakdown

Most of this manifest is explained in the [Dask Operator](https://docs.rapids.ai/deployment/stable/tools/kubernetes/dask-operator/#example-using-kubecluster) documentation in the tools section of the RAPIDS documentation.

The only addition made to the example from the above documentation page is the following section in the scheduler configuration

```yaml

# ...

affinity:

nodeAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

preference:

matchExpressions:

- key: dedicated

operator: In

values:

- scheduler

# ...

```

For the Dask scheduler Pod we are setting a node affinity using the label previously specified on the dedicated node. Node affinity in Kubernetes allows you to constrain which nodes your Pod can be scheduled based on node labels. This is also intended to be a soft requirement as we are using the `preferredDuringSchedulingIgnoredDuringExecution` type of node affinity. The Kubernetes scheduler tries to find a node which meets the rule. If a matching node is not available, the Kubernetes scheduler still schedules the Pod on any available node. This ensures that you will not face any issues with the Dask cluster even if the T4 node is unavailable.

### Accessing your Dask cluster

Once you have created your `DaskCluster` resource we can use `kubectl` to check the status of all the other resources it created for us.

```console

$ kubectl get all -l dask.org/cluster-name=rapids-dask-cluster

NAME READY STATUS RESTARTS AGE

pod/rapids-dask-cluster-default-worker-group-worker-0c202b85fd 1/1 Running 0 4m13s

pod/rapids-dask-cluster-default-worker-group-worker-ff5d376714 1/1 Running 0 4m13s

pod/rapids-dask-cluster-scheduler 1/1 Running 0 4m14s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/rapids-dask-cluster-service ClusterIP 10.96.223.217 8786/TCP,8787/TCP 4m13s

```

Here you can see our scheduler Pod and two worker Pods along with the scheduler service.

If you have a Python session running within the Kubernetes cluster (like the [example one on the Kubernetes page](../platforms/kubernetes.md)) you should be able

to connect a Dask distributed client directly.

```python

from dask.distributed import Client

client = Client("rapids-dask-cluster-scheduler:8786")

```

Alternatively if you are outside of the Kubernetes cluster you can change the `Service` to use [`LoadBalancer`](https://kubernetes.io/docs/concepts/services-networking/service/#loadbalancer) or [`NodePort`](https://kubernetes.io/docs/concepts/services-networking/service/#type-nodeport) or use `kubectl` to port forward the connection locally.

```console

$ kubectl port-forward svc/rapids-dask-cluster-service 8786:8786

Forwarding from 127.0.0.1:8786 -> 8786

```

```python

from dask.distributed import Client

client = Client("localhost:8786")

```

## Example using `KubeCluster`

In addition to creating clusters via `kubectl` you can also do so from Python with [`dask_kubernetes.operator.KubeCluster`](https://kubernetes.dask.org/en/latest/operator_kubecluster.html#dask_kubernetes.operator.KubeCluster). This class implements the Dask Cluster Manager interface and under the hood creates and manages the `DaskCluster` resource for you. You can also generate a spec with `make_cluster_spec()` which KubeCluster uses internally and then modify it with your custom options. We will use this to add node affinity to the scheduler.

```python

from dask_kubernetes.operator import KubeCluster, make_cluster_spec

spec = make_cluster_spec(

name="rapids-dask-cluster",

image="rapidsai/base:25.12a-cuda12-py3.13",

n_workers=2,

resources={"limits": {"nvidia.com/gpu": "1"}},

worker_command="dask-cuda-worker",

)

```

To add the node affinity to the scheduler, you can create a custom dictionary specifying the type of node affinity and the label of the node.

```python

affinity_config = {

"nodeAffinity": {

"preferredDuringSchedulingIgnoredDuringExecution": [

{

"weight": 100,

"preference": {

"matchExpressions": [

{"key": "dedicated", "operator": "In", "values": ["scheduler"]}

]

},

}

]

}

}

```

Now you can add this configuration to the spec created in the previous step, and create the Dask cluster using this custom spec.

```python

spec["spec"]["scheduler"]["spec"]["affinity"] = affinity_config

cluster = KubeCluster(custom_cluster_spec=spec)

```

If we check with `kubectl` we can see the above Python generated the same `DaskCluster` resource as the `kubectl` example above.

```console

$ kubectl get daskclusters

NAME AGE

rapids-dask-cluster 3m28s

$ kubectl get all -l dask.org/cluster-name=rapids-dask-cluster

NAME READY STATUS RESTARTS AGE

pod/rapids-dask-cluster-default-worker-group-worker-07d674589a 1/1 Running 0 3m30s

pod/rapids-dask-cluster-default-worker-group-worker-a55ed88265 1/1 Running 0 3m30s

pod/rapids-dask-cluster-scheduler 1/1 Running 0 3m30s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/rapids-dask-cluster-service ClusterIP 10.96.200.202 8786/TCP,8787/TCP 3m30s

```

With this cluster object in Python we can also connect a client to it directly without needing to know the address as Dask will discover that for us. It also automatically sets up port forwarding if you are outside of the Kubernetes cluster.

```python

from dask.distributed import Client

client = Client(cluster)

```

This object can also be used to scale the workers up and down.

```python

cluster.scale(5)

```

And to manually close the cluster.

```python

cluster.close()

```

#### NOTE

By default the `KubeCluster` command registers an exit hook so when the Python process exits the cluster is deleted automatically. You can disable this by setting `KubeCluster(..., shutdown_on_close=False)` when launching the cluster.

This is useful if you have a multi-stage pipeline made up of multiple Python processes and you want your Dask cluster to persist between them.

You can also connect a `KubeCluster` object to your existing cluster with `cluster = KubeCluster.from_name(name="rapids-dask")` if you wish to use the cluster or manually call `cluster.close()` in the future.

# index.html.md

# Continuous Integration

GitHub Actions

Run tests in GitHub Actions that depend on RAPIDS and NVIDIA GPUs.

single-node

# index.html.md

# Caching Docker Images For Autoscaling Workloads

The [Dask Autoscaler](https://kubernetes.dask.org/en/latest/operator_resources.html#daskautoscaler) leverages Dask’s adaptive mode and allows the scheduler to scale the number of workers up and down based on the task graph.

When scaling the Dask cluster up or down, there is no guarantee that newly created worker Pods will be scheduled on the same node as previously removed workers. As a result, when a new node is allocated for a worker Pod, the cluster will incur a pull penalty due to the need to download the Docker image.

## Using a Daemonset to cache images

To guarantee that each node runs a consistent workload, we will deploy a Kubernetes [DaemonSet](https://kubernetes.io/docs/concepts/workloads/controllers/daemonset/) utilizing the RAPIDS image. This DaemonSet will prevent Dask worker Pods created from this image from entering a pending state when tasks are scheduled.

This is an example manifest to deploy a Daemonset with the RAPIDS container.

```yaml

#caching-daemonset.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: prepuller

namespace: image-cache

spec:

selector:

matchLabels:

name: prepuller

template:

metadata:

labels:

name: prepuller

spec:

initContainers:

- name: prepuller-1

image: "rapidsai/base:25.12a-cuda12-py3.13"

command: ["sh", "-c", "'true'"]

containers:

- name: pause

image: gcr.io/google_containers/pause:3.2

resources:

limits:

cpu: 1m

memory: 8Mi

requests:

cpu: 1m

memory: 8Mi

```

You can create this Daemonset with `kubectl`.

```bash

$ kubectl apply -f caching-daemonset.yaml

```

The DaemonSet is deployed in the `image-cache` namespace. In the `initContainers` section, we specify the image to be pulled and cached within the cluster, utilizing any executable command that terminates successfully. Additionally, the `pause` container is used to ensure the Pod transitions into a Running state without consuming resources or running any processes.

When deploying the DaemonSet, after all pre-puller Pods are running successfully, you can confirm that the images have been cached across all nodes in the cluster. As the Kubernetes cluster is scaled up or down, the DaemonSet will automatically pull and cache the necessary images on any newly added nodes, ensuring consistent image availability throughout

# index.html.md

# Building RAPIDS containers from a custom base image

This guide provides instructions to add RAPIDS and CUDA to your existing Docker images. This approach allows you to integrate RAPIDS libraries into containers that must start from a specific base image, such as application-specific containers.

The CUDA installation steps are sourced from the official [NVIDIA CUDA Container Images Repository](https://gitlab.com/nvidia/container-images/cuda).

#### WARNING

We strongly recommend that you use the official CUDA container images published by NVIDIA. This guide is intended for those extreme situations where you cannot use the CUDA images as the base and need to manually install CUDA components on your containers. This approach introduces significant complexity and potential issues that can be difficult to debug. We cannot provide support for users beyond what is on this page.

If you have the flexibility to choose your base image, see the [Custom RAPIDS Docker Guide](../custom-docker.md) which starts from NVIDIA’s official CUDA images for a simpler setup.

## Overview

If you cannot use NVIDIA’s CUDA container images, you will need to manually install CUDA components in your existing Docker image. The components you need depends on the package manager used to install RAPIDS:

- **For conda installations**: You need the components from the NVIDIA `base` CUDA images

- **For pip installations**: You need the components from the NVIDIA `runtime` CUDA images

## Understanding CUDA Image Components

NVIDIA provides three tiers of CUDA container images, each building on the previous:

### Base Components (Required for RAPIDS on conda)

The **base** images provide the minimal CUDA runtime environment:

| Component | Package Name | Purpose |

|--------------------|----------------|---------------------------------------------------|

| CUDA Runtime | `cuda-cudart` | Core CUDA runtime library (`libcudart.so`) |

| CUDA Compatibility | `cuda-compat` | Forward compatibility libraries for older drivers |

### Runtime Components (Required for RAPIDS on pip)

The **runtime** images include all the base components plus additional CUDA packages such as:

| Component | Package Name | Purpose |

|-------------------------------|------------------|----------------------------------------------------|

| **All Base Components** | (see above) | Core CUDA runtime |

| CUDA Libraries | `cuda-libraries` | Comprehensive CUDA library collection |

| CUDA Math Libraries | `libcublas` | Basic Linear Algebra Subprograms (BLAS) |

| NVIDIA Performance Primitives | `libnpp` | Image, signal and video processing primitives |

| Sparse Matrix Library | `libcusparse` | Sparse matrix operations |

| Profiling Tools | `cuda-nvtx` | NVIDIA Tools Extension for profiling |

| Communication Library | `libnccl2` | Multi-GPU and multi-node collective communications |

### Development Components (Optional)

The **devel** images add development tools to runtime images such as:

- CUDA development headers and static libraries

- CUDA compiler (`nvcc`)

- Debugger and profiler tools

- Additional development utilities

#### NOTE

Development components are typically not needed for RAPIDS usage unless you plan to compile CUDA code within your container. For the complete and up to date list of runtime and devel components, see the respective Dockerfiles in the [NVIDIA CUDA Container Images Repository](https://gitlab.com/nvidia/container-images/cuda/-/tree/master/dist).

## Getting the Right Components for Your Setup

The [NVIDIA CUDA Container Images repository](https://gitlab.com/nvidia/container-images/cuda) contains a `dist/` directory with pre-built Dockerfiles organized by CUDA version, Linux distribution, and container type (base, runtime, devel).

### Supported Distributions

CUDA components are available for most popular Linux distributions. For the complete and current list of supported distributions for your desired version, check the repository linked above.

### Key Differences by Distribution Type

**Ubuntu/Debian distributions:**

- Use `apt-get install` commands

- Repository setup uses GPG keys and `.list` files

**RHEL/CentOS/Rocky Linux distributions:**

- Use `yum install` or `dnf install` commands

- Repository setup uses `.repo` configuration files

- Include repository files: `cuda.repo-x86_64`, `cuda.repo-arm64`

### Installing CUDA components on your container

1. Navigate to `dist/{cuda_version}/{your_os}/base/` or `runtime/` in the [repository](https://gitlab.com/nvidia/container-images/cuda)

2. Open the `Dockerfile` for your target distribution

3. Copy all `ENV` variables for package versioning and NVIDIA Container Toolkit support (see the Essential Environment Variables section below)

4. Copy the `RUN` commands for installing the packages

5. If you are using the `runtime` components, make sure to copy the `ENV` and `RUN` commands from the `base` Dockerfile as well

6. For RHEL-based systems, also copy any `.repo` configuration files needed

#### NOTE

Package versions change between CUDA releases. Always check the specific Dockerfile for your desired CUDA version and distribution to get the correct versions.

### Installing RAPIDS libraries on your container

Refer to the Docker Templates in the [Custom RAPIDS Docker Guide](../custom-docker.md) to configure your RAPIDS installation, adding the conda or pip installation commands after the CUDA components are installed.

## Essential Environment Variables

These environment variables are **required** when building CUDA containers, as they control GPU access and CUDA functionality through the [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/docker-specialized.html)

| Variable | Purpose |

|------------------------------|----------------------------------|

| `NVIDIA_VISIBLE_DEVICES` | Specifies which GPUs are visible |

| `NVIDIA_DRIVER_CAPABILITIES` | Required driver capabilities |

| `NVIDIA_REQUIRE_CUDA` | Driver version constraints |

| `PATH` | Include CUDA binaries |

| `LD_LIBRARY_PATH` | Include CUDA libraries |

## Complete Integration Examples

Here are complete examples showing how to build a RAPIDS container with CUDA 12.9.1 components on an Ubuntu 24.04 base image:

### conda

### RAPIDS with conda (Base Components)

Create an `env.yaml` file alongside your Dockerfile with your desired RAPIDS packages following the configuration described in the [Custom RAPIDS Docker Guide](../custom-docker.md). Set the `TARGETARCH` build argument to match your target architecture (`amd64` for x86_64 or `arm64` for ARM processors).

```dockerfile

FROM ubuntu:24.04

# Build arguments

ARG TARGETARCH=amd64

# Architecture detection and setup

ENV NVARCH=${TARGETARCH/amd64/x86_64}

ENV NVARCH=${NVARCH/arm64/sbsa}

SHELL ["/bin/bash", "-euo", "pipefail", "-c"]

# NVIDIA Repository Setup (Ubuntu 24.04)

RUN apt-get update && apt-get install -y --no-install-recommends \

gnupg2 curl ca-certificates && \

curl -fsSL https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2404/${NVARCH}/3bf863cc.pub | apt-key add - && \

echo "deb https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2404/${NVARCH} /" > /etc/apt/sources.list.d/cuda.list && \

apt-get purge --autoremove -y curl && \

rm -rf /var/lib/apt/lists/*

# CUDA Base Package Versions (from CUDA 12.9.1 base image)

ENV NV_CUDA_CUDART_VERSION=12.9.79-1

ENV CUDA_VERSION=12.9.1

# NVIDIA driver constraints

ENV NVIDIA_REQUIRE_CUDA="cuda>=12.9 brand=unknown,driver>=535,driver<536 brand=grid,driver>=535,driver<536 brand=tesla,driver>=535,driver<536 brand=nvidia,driver>=535,driver<536 brand=quadro,driver>=535,driver<536 brand=quadrortx,driver>=535,driver<536 brand=nvidiartx,driver>=535,driver<536 brand=vapps,driver>=535,driver<536 brand=vpc,driver>=535,driver<536 brand=vcs,driver>=535,driver<536 brand=vws,driver>=535,driver<536 brand=cloudgaming,driver>=535,driver<536 brand=unknown,driver>=550,driver<551 brand=grid,driver>=550,driver<551 brand=tesla,driver>=550,driver<551 brand=nvidia,driver>=550,driver<551 brand=quadro,driver>=550,driver<551 brand=quadrortx,driver>=550,driver<551 brand=nvidiartx,driver>=550,driver<551 brand=vapps,driver>=550,driver<551 brand=vpc,driver>=550,driver<551 brand=vcs,driver>=550,driver<551 brand=vws,driver>=550,driver<551 brand=cloudgaming,driver>=550,driver<551 brand=unknown,driver>=560,driver<561 brand=grid,driver>=560,driver<561 brand=tesla,driver>=560,driver<561 brand=nvidia,driver>=560,driver<561 brand=quadro,driver>=560,driver<561 brand=quadrortx,driver>=560,driver<561 brand=nvidiartx,driver>=560,driver<561 brand=vapps,driver>=560,driver<561 brand=vpc,driver>=560,driver<561 brand=vcs,driver>=560,driver<561 brand=vws,driver>=560,driver<561 brand=cloudgaming,driver>=560,driver<561 brand=unknown,driver>=565,driver<566 brand=grid,driver>=565,driver<566 brand=tesla,driver>=565,driver<566 brand=nvidia,driver>=565,driver<566 brand=quadro,driver>=565,driver<566 brand=quadrortx,driver>=565,driver<566 brand=nvidiartx,driver>=565,driver<566 brand=vapps,driver>=565,driver<566 brand=vpc,driver>=565,driver<566 brand=vcs,driver>=565,driver<566 brand=vws,driver>=565,driver<566 brand=cloudgaming,driver>=565,driver<566 brand=unknown,driver>=570,driver<571 brand=grid,driver>=570,driver<571 brand=tesla,driver>=570,driver<571 brand=nvidia,driver>=570,driver<571 brand=quadro,driver>=570,driver<571 brand=quadrortx,driver>=570,driver<571 brand=nvidiartx,driver>=570,driver<571 brand=vapps,driver>=570,driver<571 brand=vpc,driver>=570,driver<571 brand=vcs,driver>=570,driver<571 brand=vws,driver>=570,driver<571 brand=cloudgaming,driver>=570,driver<571"

# Install Base CUDA Components (from base image)

RUN apt-get update && apt-get install -y --no-install-recommends \

cuda-cudart-12-9=${NV_CUDA_CUDART_VERSION} \

cuda-compat-12-9 && \

rm -rf /var/lib/apt/lists/*

# CUDA Environment Configuration

ENV PATH=/usr/local/cuda/bin:${PATH}

ENV LD_LIBRARY_PATH=/usr/local/cuda/lib64

# NVIDIA Container Runtime Configuration

ENV NVIDIA_VISIBLE_DEVICES=all

ENV NVIDIA_DRIVER_CAPABILITIES=compute,utility

# Required for nvidia-docker v1

RUN echo "/usr/local/cuda/lib64" >> /etc/ld.so.conf.d/nvidia.conf

# Install system dependencies

RUN apt-get update && \

apt-get install -y --no-install-recommends \

wget \

curl \

git \

ca-certificates \

&& rm -rf /var/lib/apt/lists/*

# Install Miniforge